I have a rather large folder (2½ TB) that I need to sync from a ReadyNAS to an RPi 3 with a 10 TB USB drive attached. Those devices are named BACKUP and OFFSITE, respectively.

This is an ongoing saga:

With @gadget’s gracious assistance I made it through syncing my Dev folder, which contains tens upon tens of thousands of small 1-2 KB files. That mountain has been scaled.

Next up is my Files folder, which contains more and larger files.

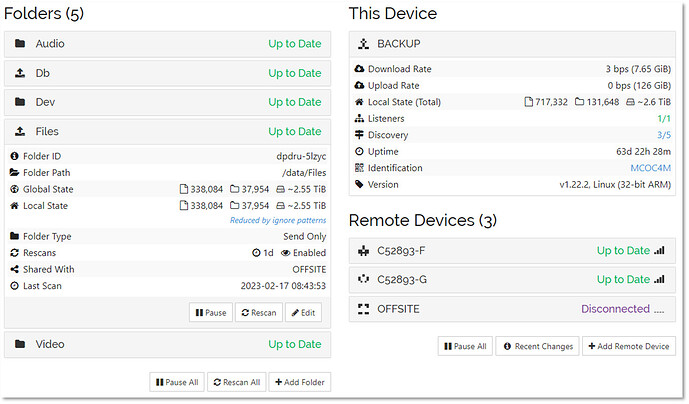

FYI here’s the GUI on BACKUP, as I now can’t get Syncthing to start at all on OFFSITE:

The longer-term problem is that Syncthing keeps crashing every few minutes while in the ‘Preparing to Sync’ state. I’ve found some discussion of this over here, but I don’t know how to tell whether the issues discussed there apply to my situation. Even if they do, I’m afraid the troubleshooting steps are a bit above my pay grade.

It won’t start at all right now because I’m moving its configuration and database to the USB drive, as advised here.

Here’s the original home path from my service config:

```
ExecStart=/usr/bin/syncthing -no-browser -no-restart -logflags=0 -home=/home/admin/.config/syncthing
```

…and here’s what I changed it to, after running `sudo rsync -r /home/admin/.config /media/pi/Elements`:

```
ExecStart=/usr/bin/syncthing -no-browser -no-restart -logflags=0 -home=/media/pi/Elements/.config/syncthing
```
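A minimal sketch of that move that also hands the copy to the service user. It assumes the unit runs as `admin`, which is inferred from the name `syncthing@admin.service`. When run as root, `rsync -r` doesn’t preserve ownership (that needs `-o`, which `-a` includes), so files copied that way end up owned by root. The function name `move_syncthing_home` is hypothetical. It copies only the `syncthing` subfolder rather than all of `.config`, and it uses `cp -a` in place of rsync.

```shell
#!/bin/sh
# Hypothetical helper: copy a Syncthing home directory to a new location and
# make the service user its owner. Stop the service before running it.
move_syncthing_home() {
    src=$1    # e.g. /home/admin/.config/syncthing
    dest=$2   # e.g. /media/pi/Elements/.config/syncthing
    owner=$3  # e.g. admin (assumed service user)
    mkdir -p "$dest" || return 1
    # -a keeps modes and timestamps; "$src/." copies the contents of src
    # into dest instead of nesting a second "syncthing" folder inside it.
    cp -a "$src/." "$dest/" || return 1
    # Ensure the whole tree belongs to the service user, e.g. if an earlier
    # copy left root-owned files behind.
    chown -R "$owner" "$dest" || return 1
}
```

Usage, after `sudo systemctl stop syncthing@admin.service`: `sudo sh -c '. ./move-home.sh; move_syncthing_home /home/admin/.config/syncthing /media/pi/Elements/.config/syncthing admin'`, where `move-home.sh` is whatever file the function is saved in.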

Here’s what systemctl status reports:

```
pi@OFFSITE:/media/pi/Elements $ sudo systemctl status syncthing@admin.service
* syncthing@admin.service - Syncthing service for admin
     Loaded: loaded (/etc/systemd/system/syncthing@admin.service; enabled; vendor preset: enabled)
    Drop-In: /etc/systemd/system/syncthing@admin.service.d
             `-override.conf
     Active: failed (Result: exit-code) since Fri 2023-02-17 17:35:29 AKST; 1min 57s ago
    Process: 64338 ExecStartPre=/home/pi/Documents/Scripts/mount-sync-drive.sh (code=exited, status=0/SUCCESS)
    Process: 64340 ExecStart=/usr/bin/syncthing -no-browser -no-restart -logflags=0 -home=/media/pi/Elements/.config/syncthing (code=exited, status=1/FAILURE)
   Main PID: 64340 (code=exited, status=1/FAILURE)
        CPU: 74ms

Feb 17 17:35:29 OFFSITE systemd[1]: Starting Syncthing service for admin...
Feb 17 17:35:29 OFFSITE systemd[1]: Started Syncthing service for admin.
Feb 17 17:35:29 OFFSITE syncthing[64340]: WARNING: Failure on home directory: mkdir /media/pi/Elements/.config/syncthing: permission denied
Feb 17 17:35:29 OFFSITE systemd[1]: syncthing@admin.service: Main process exited, code=exited, status=1/FAILURE
Feb 17 17:35:29 OFFSITE systemd[1]: syncthing@admin.service: Failed with result 'exit-code'.
```

I’m not sure why it’s trying to create that `syncthing` directory, since it already exists. I’ve verified that manually and checked its permissions against the original.
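One thing worth ruling out is an assumption, not something the log confirms. A `mkdir ... permission denied` error can show up even for an existing directory when the service user can’t look the directory up at all. For example, a parent such as `/media/pi` might not be traversable by `admin`. On Raspberry Pi OS, desktop automounts under `/media/pi` are often restricted to the `pi` user, and comparing permissions on the folder itself won’t reveal that. The hypothetical `check_path` function below walks the path one component at a time, testing permissions as whoever runs it.

```shell
#!/bin/sh
# Hypothetical helper (not part of Syncthing): walk each component of an
# absolute path, report the first one the current user cannot reach, then
# check that the final directory is writable.
check_path() {
    target=$1
    case $target in
        /*) ;;
        *) echo "need an absolute path: $target"; return 2 ;;
    esac
    rest=${target#/}
    current=""
    while [ -n "$rest" ]; do
        # Peel off the next component and shorten the remainder.
        part=${rest%%/*}
        if [ "$part" = "$rest" ]; then rest=""; else rest=${rest#*/}; fi
        [ -z "$part" ] && continue   # skip empty parts from "//"
        current="$current/$part"
        if [ ! -e "$current" ]; then
            echo "missing: $current"
            return 1
        fi
        # A directory without execute (x) permission can't be passed through.
        if [ -d "$current" ] && [ ! -x "$current" ]; then
            echo "not traversable: $current"
            return 1
        fi
    done
    if [ -w "$target" ]; then
        echo "ok: $target is writable"
        return 0
    fi
    echo "not writable: $target"
    return 1
}
```

Run it as the service user, e.g. `sudo -u admin sh -c '. ./check-path.sh; check_path /media/pi/Elements/.config/syncthing'`. For a quick visual check, `namei -l /media/pi/Elements/.config/syncthing` (from util-linux) lists the owner and mode of every component, and `getfacl /media/pi` shows any ACL entries on that mount point.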

This whole thing sure is turning out to be a headache. I blame it on the skimpy resources of the RPi 3.

Is there a way to tell Syncthing on the RPi to flush its memory buffers more often, so that it won’t crash every 5-10 minutes?