I’ve made a quick 30-minutes sketch to test. Here are my findings. This is C#, straightforward to read.

database 1:

CREATE TABLE IF NOT EXISTS names (

id INTEGER PRIMARY KEY AUTOINCREMENT NOT NULL,

name TEXT NOT NULL COLLATE BINARY

)

STRICT;

CREATE UNIQUE INDEX IF NOT EXISTS names_name ON names (name);

database 2:

CREATE TABLE IF NOT EXISTS names (

id INTEGER PRIMARY KEY AUTOINCREMENT NOT NULL,

parent_id INTEGER NOT NULL,

name TEXT NOT NULL COLLATE BINARY

)

STRICT;

CREATE UNIQUE INDEX IF NOT EXISTS names_parent_id_name ON names (parent_id,name);

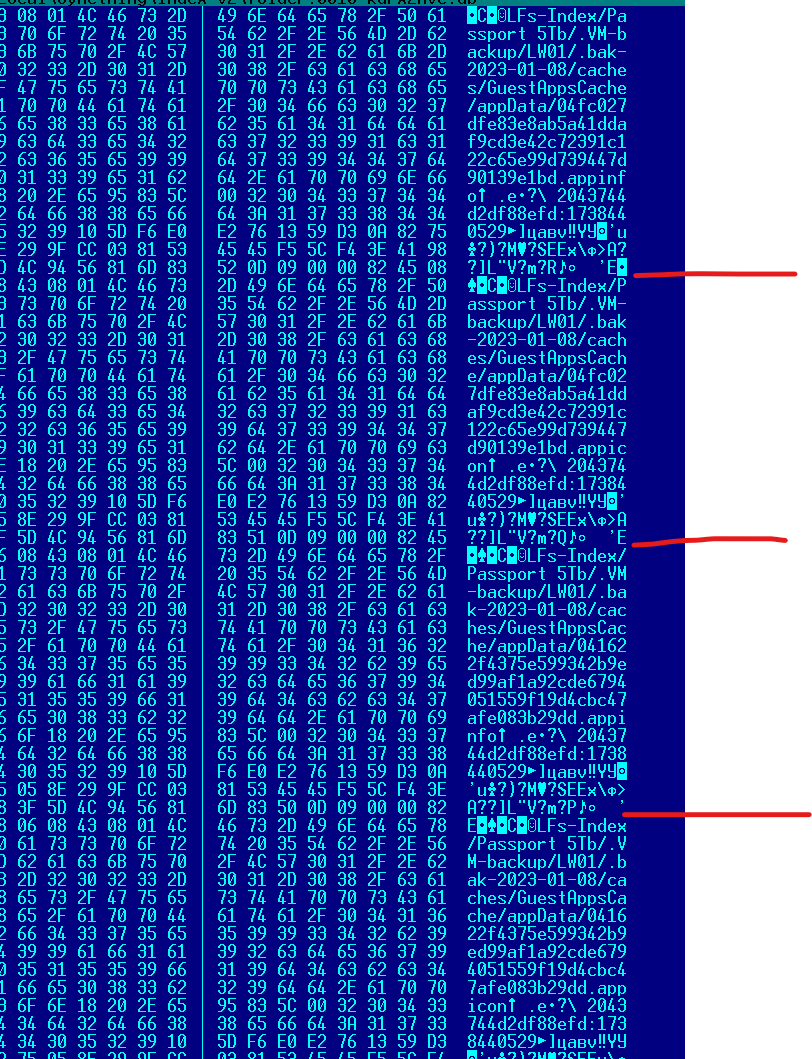

all was filled with my projects folder, using simple dir walk, 180K files, 20K folders. Projects. Like syncthing project, approx the same hierarchy, as usual.

Database1: 81 MB, Database2: 20 MB.

Then I did the query code.

database 1:

static object Query1 (string path)

{

SqliteCommand c;

c = conn1.CreateCommand ();

c.CommandText = "SELECT id FROM names WHERE name=@name";

c.Parameters.AddWithValue ("name", path);

return c.ExecuteScalar ();

}

database 2:

static object Query2b (string path)

{

var path_tokens = path.Split ('\\');

SqliteCommand c = conn2.CreateCommand ();

StringBuilder ct = new StringBuilder (500);

var reference_table_level = "ll";

for (int t = 1; t < path_tokens.Length; t++)

{

ct.AppendFormat (" INNER JOIN names AS l{0} ON {1}.id = l{0}.parent_id AND l{0}.name=@name{0}\n", t, reference_table_level);

c.Parameters.AddWithValue (string.Format ("name{0}", t), path_tokens[t]);

reference_table_level = string.Format ("l{0}", t);

}

ct = new StringBuilder (string.Format ("SELECT {0}.id FROM names AS ll\n{1}", reference_table_level, ct));

ct.Append ("WHERE ll.name=@name AND ll.parent_id=0");

c.Parameters.AddWithValue ("name", path_tokens[0]);

c.CommandText = ct.ToString ();

return c.ExecuteScalar ();

}

then the benchmark:

started = DateTime.Now;

for (int i = 0; i < 10000; i++)

{

Query1 (@"C:\Projects -common-\Arduino\DKVM1-STM32F103C6T6\Debug\Drivers\STM32F1xx_HAL_Driver\Src\stm32f1xx_hal_dma.d");

}

Console.WriteLine ("Elasped: {0}", DateTime.Now - started);

started = DateTime.Now;

for (int i = 0; i < 10000; i++)

{

Query2b (@"Projects -common-\Arduino\DKVM1-STM32F103C6T6\Debug\Drivers\STM32F1xx_HAL_Driver\Src\stm32f1xx_hal_dma.d");

}

Console.WriteLine ("Elasped: {0}", DateTime.Now - started);

and the repeatable results:

Elasped: 00:00:00.6381574

Elasped: 00:00:01.4530019

Twice the time. But I was causing NO any memory or cache contention to speculate that database which is 2x slower is now 4x smaller. Imagine we are looking into this index with limited page cache or RAM.

Will it benefit or not? Up to you. But things are not like “a disaster” going this way. And in a lot of real world scenarios, it will be just a lot faster with a smaller DB. Just for your reference.

Going to hash-index will also make it like additionally even more twice smaller, without any serious change in query performance. Can check that if you are interested.

UPD: this query inside -

SELECT l7.id FROM names AS ll

INNER JOIN names AS l1 ON ll.id = l1.parent_id AND l1.name=@name1

INNER JOIN names AS l2 ON l1.id = l2.parent_id AND l2.name=@name2

INNER JOIN names AS l3 ON l2.id = l3.parent_id AND l3.name=@name3

INNER JOIN names AS l4 ON l3.id = l4.parent_id AND l4.name=@name4

INNER JOIN names AS l5 ON l4.id = l5.parent_id AND l5.name=@name5

INNER JOIN names AS l6 ON l5.id = l6.parent_id AND l6.name=@name6

INNER JOIN names AS l7 ON l6.id = l7.parent_id AND l7.name=@name7

WHERE ll.name=@name AND ll.parent_id=0

UPD: with name4 approach (and another random dataset),

Full names, unique index, Elasped: 00:00:00.7169035

Level names, indexed, Elasped: 00:00:01.7745987

Leval names, name4 int32 hash index only, Elasped: 00:00:02.4254728

name4 is like that,

CREATE TABLE IF NOT EXISTS names (

id INTEGER PRIMARY KEY AUTOINCREMENT NOT NULL,

parent_id INTEGER NOT NULL,

name4 INTEGER NOT NULL,

name TEXT NOT NULL COLLATE BINARY

)

STRICT;

CREATE UNIQUE INDEX IF NOT EXISTS names_parent_id_name4 ON names (parent_id,name4);

and the query example is

SELECT l7.id FROM names AS ll

INNER JOIN names AS l1 ON ll.id = l1.parent_id AND l1.name=@name1 AND l1.name4=@nameHash1

INNER JOIN names AS l2 ON l1.id = l2.parent_id AND l2.name=@name2 AND l2.name4=@nameHash2

INNER JOIN names AS l3 ON l2.id = l3.parent_id AND l3.name=@name3 AND l3.name4=@nameHash3

INNER JOIN names AS l4 ON l3.id = l4.parent_id AND l4.name=@name4 AND l4.name4=@nameHash4

INNER JOIN names AS l5 ON l4.id = l5.parent_id AND l5.name=@name5 AND l5.name4=@nameHash5

INNER JOIN names AS l6 ON l5.id = l6.parent_id AND l6.name=@name6 AND l6.name4=@nameHash6

INNER JOIN names AS l7 ON l6.id = l7.parent_id AND l7.name=@name7 AND l7.name4=@nameHash7

WHERE ll.name=@name AND ll.name4=@nameHash AND ll.parent_id=0

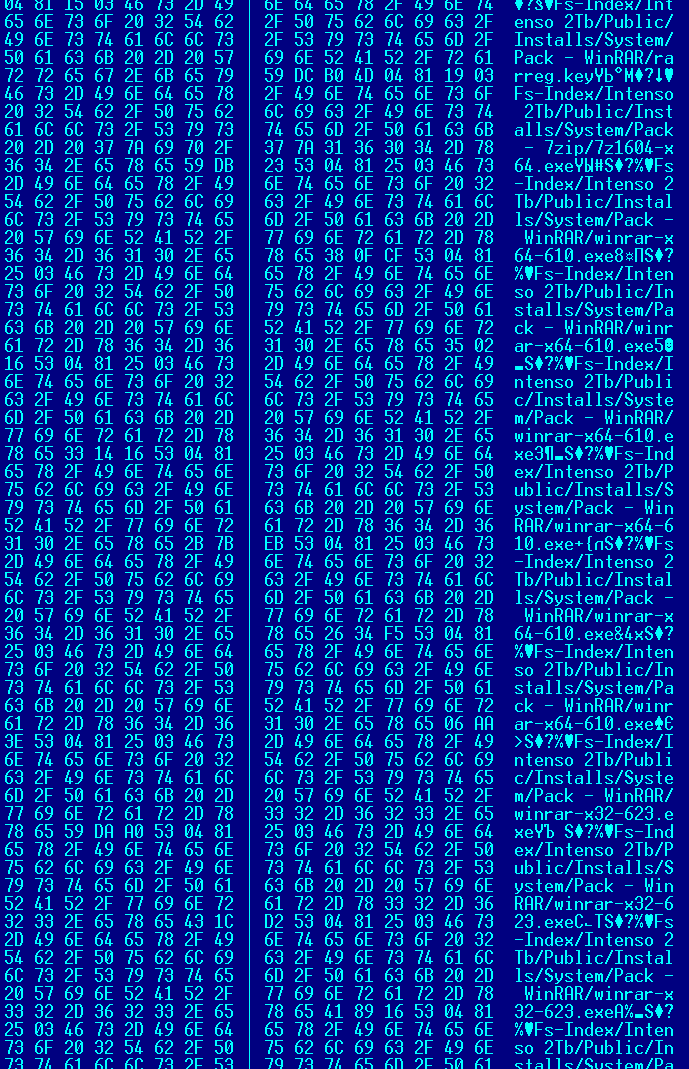

and DB indexes stats,

full names -

*** All indices ***************************************************************

Percentage of total database...................... 52.7%

Number of entries................................. 288816

Bytes of storage consumed......................... 33542144

Bytes of payload.................................. 28642729 85.4%

Bytes of metadata................................. 986147 2.9%

level names -

*** All indices ***************************************************************

Percentage of total database...................... 53.1%

Number of entries................................. 288816

Bytes of storage consumed......................... 13647872

Bytes of payload.................................. 11012717 80.7%

Bytes of metadata................................. 906433 6.6%

level + name4 hashed -

*** All indices ***************************************************************

Percentage of total database...................... 29.1%

Number of entries................................. 288815

Bytes of storage consumed......................... 5533696

Bytes of payload.................................. 3976139 71.9%

Bytes of metadata................................. 882653 16.0%