Does a new database layer (or any of the changes in the v2 branch) even warrant a major version bump?

According to Versions & Releases: Major, Minor, or Patch:

We decide the version number for a new release based on the following criteria:

- Is the new version protocol incompatible with the previous one, so that they cannot connect to each other or otherwise can’t sync files for some reason? That’s a new major version. (This hasn’t happened yet.)

- Are there changes in the REST API so that integrations or wrappers need changes, or did the database schema or configuration change so that a downgrade might be problematic? That’s a new minor version.

- If there are no specific concerns as above, it’s a new patch version.
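
Read literally, those three criteria amount to a small decision function checked in order. A minimal Go sketch of that reading follows; the `Change` type, its fields, and `Bump` are made up for illustration and are not taken from the Syncthing code base or its release tooling.

```go
package main

import "fmt"

// Change describes what a release alters. The type and field names are
// hypothetical, chosen only to mirror the three documented criteria.
type Change struct {
	ProtocolIncompatible bool // old and new versions cannot connect or sync
	RESTAPIChanged       bool // integrations or wrappers need changes
	DowngradeRisky       bool // database schema or configuration changed
}

// Bump applies the criteria in the documented order and returns
// "major", "minor", or "patch".
func Bump(c Change) string {
	switch {
	case c.ProtocolIncompatible:
		return "major"
	case c.RESTAPIChanged || c.DowngradeRisky:
		return "minor"
	default:
		return "patch"
	}
}

func main() {
	// A new database layer that can still sync with older peers.
	newDB := Change{DowngradeRisky: true}
	fmt.Println(Bump(newDB)) // minor
}
```

Under this reading, a database change alone lands on "minor", which is the point the question above is making.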

AFAIK you can sync v2 devices with v1 devices, so it seems to me that either it shouldn’t be a new major version, or the docs are out of date.

Please see this post for the reasoning behind why this is v2:

I’ve got another successful migration story. This is one of my ultimate tests - Intel Atom Z3740D paired with 2 GB of RAM and slow eMMC storage. It runs Windows 10 x86 (with x64 not supported due to 32-bit UEFI). Previously, when trying to migrate it, I experienced a lot of crashes due to running out of memory.

This time, everything has gone very smoothly. The migration took just 1 minute. The device doesn’t sync much, so this is probably expected, but still, last time it was much slower.

[start] 2025/04/17 08:48:26 INFO: syncthing v2.0.0-beta.9.dev.16.g6254b066-tomasz86-v2.0.0-beta.9 "Hafnium Hornet" (go1.24.2 windows-386) tomasz86@tomasz86 2025-04-17 06:41:30 UTC [noupgrade, stnoupgrade]

[start] 2025/04/17 08:48:26 INFO: Migrating old-style database to SQLite; this may take a while...

[start] 2025/04/17 08:48:27 INFO: Migrated folder 5eief-x9lna; 26 files and 0k blocks in 0s, 225.6 files/s

[start] 2025/04/17 08:48:37 INFO: Migrating folder abwgi-qj5iw... (12000 files and 0k blocks in 10s, 1198.5 files/s)

[start] 2025/04/17 08:48:47 INFO: Migrating folder abwgi-qj5iw... (24000 files and 0k blocks in 20s, 1198.7 files/s)

[start] 2025/04/17 08:48:48 INFO: Migrated folder abwgi-qj5iw; 25950 files and 0k blocks in 21s, 1187.9 files/s

[start] 2025/04/17 08:49:01 INFO: Migrated folder axfiw-stvqt; 7792 files and 19k blocks in 12s, 626.5 files/s

[start] 2025/04/17 08:49:07 INFO: Migrated folder hqzau-rtdrn; 4002 files and 4k blocks in 6s, 636.2 files/s

[start] 2025/04/17 08:49:10 INFO: Migrated folder j2kgm-h9kws; 329 files and 14k blocks in 3s, 107.8 files/s

[start] 2025/04/17 08:49:10 INFO: Migrated folder mgieg-z3mzc; 80 files and 0k blocks in 0s, 418.3 files/s

[start] 2025/04/17 08:49:15 INFO: Migrated folder ofxx9-nqyuz; 4978 files and 0k blocks in 4s, 1144.3 files/s

[start] 2025/04/17 08:49:15 INFO: Migrated folder p4qoa-r9bwd; 63 files and 0k blocks in 0s, 546.6 files/s

[start] 2025/04/17 08:49:15 INFO: Migrated folder w9h6p-vpccd; 21 files and 0k blocks in 0s, 286.7 files/s

[start] 2025/04/17 08:49:18 INFO: Migrated folder wqpwv-jpr97; 965 files and 5k blocks in 2s, 342.2 files/s

[start] 2025/04/17 08:49:31 INFO: Migrating folder xicj7-mw4ht... (5000 files and 12k blocks in 12s, 388.1 files/s)

[start] 2025/04/17 08:49:36 INFO: Migrated folder xicj7-mw4ht; 8212 files and 16k blocks in 18s, 449.7 files/s

[start] 2025/04/17 08:49:36 INFO: Migrating virtual mtimes...

[start] 2025/04/17 08:49:36 INFO: Migration complete, 52418 files and 62k blocks in 1m9s

What is more important is that the RAM usage was only 100 MB at the maximum the whole time, which prevented Syncthing from running into any out of memory issues.

On a side note, I also want to confirm that I still experience the issue described in https://forum.syncthing.net/t/syncthing-on-sqlite-help-test/23981/196, where folders with many files sync extra slowly when downloading files for the first time. The problem happens universally regardless of the hardware.

For the time being, on the device in question, I “worked around” it by manually copying the folder in advance, so that both sides had all the files. This way, Syncthing just scanned and compared the two, marking both sides “Up to Date”, without having to actually sync anything.

Edit:

I’ve now upgraded almost all my devices to Syncthing v2. For the most part, synchronisation works with zero issues. Unfortunately, from time to time, I still get hit by the problem described above.

However, I’ve also tried to pay more attention to find any recurring patterns, and what I can say is that for me, it appears that Syncthing gets stuck in some kind of a loop or similar.

For example, I’ve just had a case of a folder that was stuck for 2 hours or so, unable to download just ~500 MB of data from another local device. During those 2 hours, the RAM usage kept growing, and it was also hammering the Ryzen 9950X CPU like crazy. In fact, I only noticed that something was wrong in the first place because the CPU was running super hot. I then decided to kill Syncthing completely and restart it. After doing so, the very same folder synced almost immediately.

Did you happen to have done any profiles (cpu or heap) during that time?

Unfortunately not, but the issue seems to repeat itself every few hours, so I should be able to provide the profiles later on. For the record, it seems either the same or very similar to what I had experienced on another device in https://forum.syncthing.net/t/syncthing-on-sqlite-help-test/23981/196, which has a lot of profiles attached.

The difference is that previously, the file counter was still moving, but now, it seems to get completely stuck while still doing something, as indicated by the growing RAM and CPU usage. In that state, I’ve also tried to go through all debug logging, but I couldn’t see anything suspicious there.

That’d be good. I had a quick look at the memory and cpu profiles in that post. Memory usage is huge and, unexpectedly, comes from filesystem stuff - maybe that’s a bug fixed in beta 10? And cpu usage is mostly in sqlite C, while looking for blocks that exist locally (AllLocalFilesWithBlocksHash). Neither seems obviously related to what you are describing now (but I might just not be seeing it).

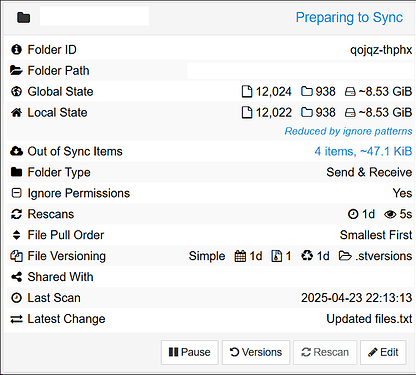

This took longer than expected, but I’ve now got another folder stuck in this never-ending “Preparing to Sync” state. The RAM usage isn’t extremely high (at around 1.7 GB), but the CPU is being hammered for no apparent reason. This is with basically all other folders just sitting idle.

I’m attaching profiles and other files from the support bundle below:

- syncthing-block-windows-amd64-v2.0.0-beta.9.dev.16.g6254b066-tomasz86-v2.0.0-beta.9-223717.pprof (65.1 KB)

- syncthing-cpu-windows-amd64-v2.0.0-beta.9.dev.16.g6254b066-tomasz86-v2.0.0-beta.9-223717.pprof (23.1 KB)

- syncthing-goroutines-windows-amd64-v2.0.0-beta.9.dev.16.g6254b066-tomasz86-v2.0.0-beta.9-223713.pprof (11.6 KB)

- syncthing-heap-windows-amd64-v2.0.0-beta.9.dev.16.g6254b066-tomasz86-v2.0.0-beta.9-223713.pprof (439.0 KB)

- connection-stats.json.txt (3.1 KB)

- usage-reporting.json.txt (4.2 KB)

- version-platform.json.txt (375 Bytes)

I’d be very thankful if you could find some time to look at them and let me know if there’s anything obvious in there, since I’d really prefer not to downgrade to LevelDB again, but if this continues, I may have to, as I really need these files to sync with no interruptions.

Yeah ok, I told you to redo this for nothing apparently: Same pattern. And my beta 9 vs 10 comment seems pointless too, at least I don’t see any relevant changes between the two versions. Anyway, this time I did have a look at the code. And I found a “nice” bug that explains the heap usage in filesystem (teaser: v2 doesn’t use function iterator, but kept defers). I’ll send a PR shortly. Not sure if this explains the overall problem/high cpu though. I have some unfinished/somewhat rambling thoughts on that, but I need to call it a day here - maybe they are useful to someone (kinda doubt it):

The code that’s using the cpu is part of the copierRoutine:

It is called within a loop on the results of AllLocalBlocksWithHash. Maybe “normally” these results are short and/or the loop is exited quickly (block successfully copied locally), but in some (edge) cases that doesn’t happen, and you hit that. Seems kinda plausible that there’s a scenario where a lot of files have the same block. And if you pair that with all of those files somehow not being accessible, that would cause this. And unfortunately there’s no logging there, or rather only a single debug log for hash verification failure. Filesystem debug logging would show it though, but it is also super verbose as usual - if we investigate further down that route, adding model debug logging seems more helpful.
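
To make that scenario concrete, here is a rough Go sketch of the loop shape described above. It is not the actual copierRoutine code: `candidate`, `tryCopy`, and `copyBlock` are hypothetical names, and the slice stands in for whatever the database lookup returns.

```go
package main

import (
	"errors"
	"fmt"
)

// candidate is a hypothetical stand-in for one database hit: a local file
// recorded as containing the wanted block.
type candidate struct {
	path       string
	accessible bool
}

var errUnavailable = errors.New("file not accessible")

// tryCopy pretends to read the block from the candidate file.
func tryCopy(c candidate) error {
	if !c.accessible {
		return errUnavailable
	}
	return nil
}

// copyBlock tries candidates in order and stops at the first success.
// It returns whether the block was copied and how many candidates were tried.
func copyBlock(cands []candidate) (copied bool, attempts int) {
	for _, c := range cands {
		attempts++
		if err := tryCopy(c); err != nil {
			continue // no logging here, so failed attempts stay invisible
		}
		return true, attempts
	}
	return false, attempts
}

func main() {
	// Normal case: the first candidate works and the loop exits at once.
	good := []candidate{{"a", true}, {"b", true}}
	fmt.Println(copyBlock(good)) // true 1

	// Edge case: many files share the block and none is accessible.
	bad := make([]candidate, 10000)
	fmt.Println(copyBlock(bad)) // false 10000
}
```

In the edge case every needed block costs a full pass over all candidates, which would look like sustained CPU use with no visible progress.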

Gotta stop here for now, let’s see what the initial bugfix does (will link once PRed).

Edit: While trying to fix that bug, I think I found another one, and that seems even more plausible to contribute to lots of cpu. I don’t think there’s any need to investigate further until we see the effects of those fixes (if they are fixes, let’s hope so).

Edit2: More layers of confusion - I am both too uncertain about my understanding and too tired to continue a fix of all layers.

Edit3: Lazy fix of one issue, some chance it’s the biggest issue: Fix loop-break regression while block copying in puller by imsodin · Pull Request #10069 · syncthing/syncthing · GitHub

Now it’s definitely way past time I get my sleepzzz

Thanks a lot for the investigation!

I left it overnight on purpose just to see if anything changes, but after 7+ hours, the folder is still stuck in the very same state. I’ll now cherry-pick the commit into my build, or upgrade to beta.11, and see if it fixes the issue.

Edit:

I just wanted to confirm that after using the fixed version for about 1 week now, I haven’t experienced any further issues. This is with Syncthing v2 installed on 11 devices - 8 running Windows 10/11 x64, one running Windows 10 x86, and two running Android arm64.

I was about to ask if you still see issues - I missed the edit. Nice to read that it works fine now for you.

And I now finally have the other fix/change I mentioned before based on your profiles. And it turns out it’s also a pretty bad bug for large sync/pull operations, so if you did still have issues (apparently not), this would have likely helped: fix(model): Close fd immediately in copier by imsodin · Pull Request #10079 · syncthing/syncthing · GitHub
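
For anyone wondering what the “kept defers” teaser from earlier means in practice, here is a minimal Go sketch of that general class of bug. It is an illustration under my own assumptions, not the code from the PR: `resource`, `acquire`, `deferInLoop`, and `closePerIteration` are hypothetical names, and a counter stands in for open file descriptors.

```go
package main

import "fmt"

// resource is a hypothetical stand-in for an open file descriptor.
type resource struct{ open *int }

func acquire(open *int) resource { *open++; return resource{open} }

func (r resource) Close() { *r.open-- }

// deferInLoop mimics a callback body moved into a plain loop with its
// defer left in place: nothing is closed until the function returns.
func deferInLoop(n int) (peak int) {
	open := 0
	for i := 0; i < n; i++ {
		r := acquire(&open)
		defer r.Close() // runs at function return, not at end of iteration
		if open > peak {
			peak = open
		}
	}
	return peak
}

// closePerIteration wraps the body in a function literal, so the defer
// fires at the end of every iteration, as it did with a callback.
func closePerIteration(n int) (peak int) {
	open := 0
	for i := 0; i < n; i++ {
		func() {
			r := acquire(&open)
			defer r.Close()
			if open > peak {
				peak = open
			}
		}()
	}
	return peak
}

func main() {
	fmt.Println(deferInLoop(1000))       // 1000
	fmt.Println(closePerIteration(1000)) // 1
}
```

With a callback-style iterator each defer runs when the callback returns; once the body lives directly inside a `for` loop, the defers pile up until the enclosing function exits, which fits heap usage growing during a long pull.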