I’ve been working on migrating the Syncthing database from leveldb to SQLite, and it’s reached the point where I would like to ask for help in testing.

Why

The database layer has long been a murky corner, a source of real or potential bugs both in our own code and in the now unmaintained leveldb database code. Leveldb lacks features like real transactions, and maintaining our own indexes on top of it has been a source of inconsistencies. We also see a lot of odd crashes in the database layer.

The new database layer uses a SQLite database, with tables for files, folders, size accounting, etc. These are relational and indexed with proper keys and foreign keys. Metadata is maintained by triggers that execute in the same transaction as the change itself. There are a lot of changes all over the place to accommodate this.

How you can help

Run the new testing release on one or more of your devices. See how it works and which new problems show up. When you do see problems, be prepared to share logs, screenshots and a full copy of the database – with me, if not with the entire world.

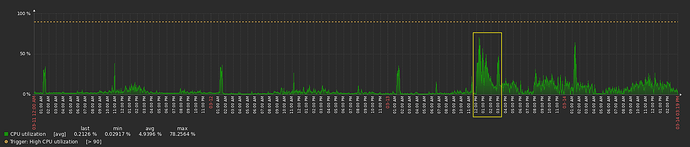

I’ve run it in my home setup for a couple of weeks (one Mac, one amd64 Linux server and a Raspberry Pi). It hasn’t eaten any of my data as far as I can tell and I don’t think it will eat your data either, but I can’t guarantee it. I don’t use all of Syncthing’s features and haven’t tested everything. Make sure you have a backup if you run it on important data.

What you can expect

It’s best to do the migration when everything is in sync and quiescent.

On first startup Syncthing will convert the existing database to SQLite. This can take quite a while. The old database is renamed to index-v0.14.0.db.migrated. When Syncthing next connects to other devices it will exchange full indexes with all of them. This can also take quite a while, and things may look out of sync in the meantime. This happens for every device that is migrated.

Some things are slower in the new system. One advantage of a simple key-value database like leveldb is that inserts and changes are incredibly fast. Now, changes entail triggers, multiple indexes to update, and transactions.

Some other things are quite a lot faster, for example listing the out-of-sync files for a remote device, which now uses indexes instead of the full database traversal that leveldb required.

How to revert to the old system

Make sure your files are in sync, as far as possible. Shut down the SQLite version, rename index-v0.14.0.db.migrated back to index-v0.14.0.db, start again. You might get a few sync conflicts but hopefully it’s not too bad.

Let’s go!

Here are builds: the "SQLite preview 1" release of calmh/syncthing on GitHub. There is also a Docker image at ghcr.io/calmh/syncthing:v2.0.0-beta.1.

Unfortunately, the Windows and macOS binaries are not code signed, so they may be a little more annoying than usual to get running.

“2.0.0?!” Yes, for now at least. The major version bump prevents automatic upgrades from this build back to non-SQLite versions and, if/when this gets into mainline, it also prevents automatic upgrades from previous versions into the SQLite one.
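
The version bump works because automatic upgrades do not cross a major version boundary. The Go function below is a hypothetical gate illustrating that rule, not Syncthing's actual upgrade code, and the version strings are only examples. For brevity it ignores pre-release suffixes such as -beta.1, which means, for example, it would not offer 2.0.0 to a 2.0.0-beta.1 install; a real implementation has to order those too.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// version holds major, minor and patch numbers.
type version [3]int

// parseVersion accepts "vMAJOR.MINOR.PATCH" with an optional "-suffix",
// which this sketch simply drops.
func parseVersion(s string) (version, error) {
	var v version
	core, _, _ := strings.Cut(strings.TrimPrefix(s, "v"), "-")
	parts := strings.Split(core, ".")
	if len(parts) != 3 {
		return v, fmt.Errorf("bad version %q", s)
	}
	for i, p := range parts {
		n, err := strconv.Atoi(p)
		if err != nil {
			return v, fmt.Errorf("bad version %q: %w", s, err)
		}
		v[i] = n
	}
	return v, nil
}

// newer reports whether a is strictly greater than b.
func newer(a, b version) bool {
	for i := range a {
		if a[i] != b[i] {
			return a[i] > b[i]
		}
	}
	return false
}

// allowAutoUpgrade permits an automatic upgrade only to a newer release with
// the same major version; unparseable input is refused.
func allowAutoUpgrade(current, candidate string) bool {
	cur, err1 := parseVersion(current)
	cand, err2 := parseVersion(candidate)
	if err1 != nil || err2 != nil {
		return false
	}
	return cand[0] == cur[0] && newer(cand, cur)
}

func main() {
	fmt.Println(allowAutoUpgrade("v1.2.3", "v1.3.0"))        // true
	fmt.Println(allowAutoUpgrade("v1.2.3", "v2.0.0-beta.1")) // false: crosses major
	fmt.Println(allowAutoUpgrade("v2.0.0-beta.1", "v1.9.9")) // false: crosses major
}
```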

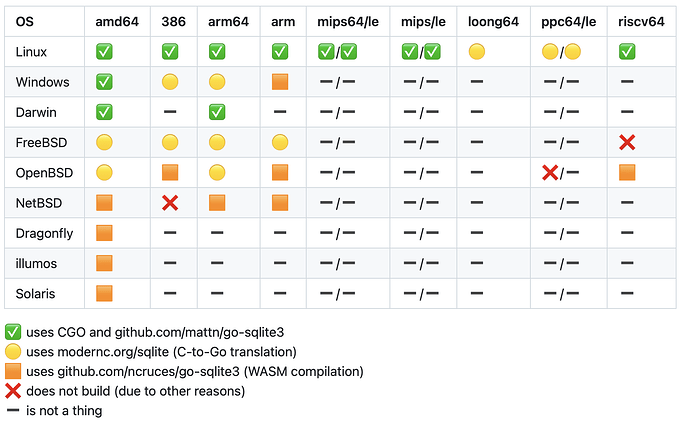

There are several reasons we might want to not do auto upgrades from previous versions. This table is one of them:

Building a Go program with SQLite is nontrivial, as SQLite is not written in Go. We can build and include the C library, which is what happens in the green checkbox scenarios. That gives us a binary that depends on libc, which it previously didn't, so it may not run on some systems that could run Syncthing before. There is also a translation of the C SQLite source into Go, along with a significant part of the C runtime libraries, which is what you get in the yellow circle scenarios. It's a marvelous machine and I'm amazed it works at all, but it actually does seem to. It doesn't support all platforms, though, and there is a third alternative, a WASM compilation of SQLite…

Currently 95-something percent of users are covered by the green builds. Adding windows/386, which is possible with some effort, would bring that up to 99%. For the other platforms I think it’s good that we provide a functioning binary, but ideally they would get platform native builds from their package managers. It’s not that it’s impossible to build SQLite with C for NetBSD for example, it’s just that it’s tricky to do without a NetBSD build machine.