Hi, when accessing Syncthing remotely I don’t see any info regarding the number of files, synced files, etc. Locally it is OK. Any advice?

Show a screenshot, please.

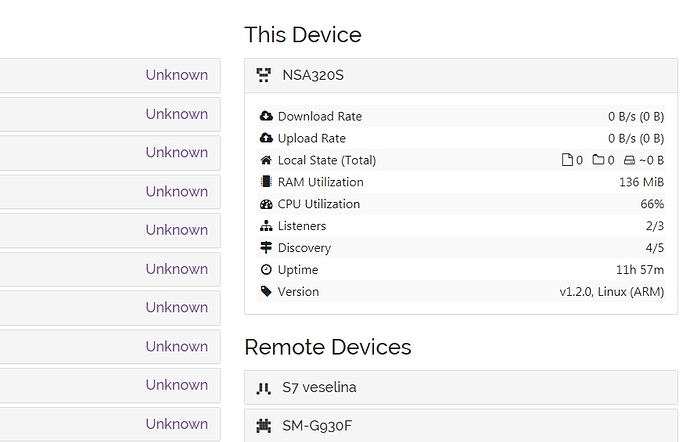

Thanks for looking into this, just a couple of things: the web interface of my NAS device is not showing the stats and I have to add or remove shares at least 4 or 5 times in order to have them “remembered”. Also, the sync is working, but only half of the files are synced; the rest stay in .tmp state. Something is definitely wrong. Here is the screenshot:

By the way, it is also not working locally, not only in the web GUI. Now it is showing the info, but I always have failed items. I think the best way to proceed will be to somehow reset Syncthing on my NAS. Any advice? Thanks, this is driving me nuts.

The web UI not showing the info looks like a performance issue (66% CPU util), which is not that uncommon on (usually) low-powered NAS devices. If there is a huge sync in progress (which is likely given you write about lots of tmp files), you’ll probably just have to wait. If failed items are listed, a reason is given for each failure, so start there. Otherwise, if the web UI is unresponsive, check the logs for info.

Hello:

For the record, I’m seeing something similar since 1.2.0 came out, across multiple (but not all) Synology NAS units I have running Syncthing: it seems like Syncthing stalls - often with unusually low CPU usage - requiring a restart of the package. Then, again, Syncthing seems to stall a day or two later.

In this state, the web UI never fully populates, and I can’t grab a support bundle to send. I’ve got it on my list to set Syncthing logging and see what’s going on - but I haven’t yet had a chance to do so.

Before 1.2.0 was released, I’d never seen this issue (other than when the NAS is taking a long time to populate the UI because of performance issues - but this is different).

I will grab more info when I can.

Thanks.

Once it stalls, send a SIGQUIT signal to it and capture its stdout (which probably goes to a log); that will have the stack traces.

Thanks for reporting and bug tracking!

How many files do you have in Syncthing?

I want to chime in here as well.

At the moment I’m syncing 850GiB of roughly 4500 media files (mostly audio/video) between my current Synology NAS (DS918+) and a MacBook Air used as HTPC on the one side, and my old one (DS411slim) on the other side. Both NAS boxes are on the same DSM 6.2 and all 3 machines are running syncthing 1.2.0 from the stable update branch. My complete syncthing cluster consists of 3 to 6 active machines on my local LAN sharing 1TiB of data in 8 sync folders. Relays etc. are active on all machines since I sync to an additional server outside my LAN for backup purposes, but that machine and the other clients aren’t involved here and don’t share the folder with my media files, but they all connect to both NAS boxes for other folders.

Typically it would take about 7 hours to copy that amount of data to the old DS411slim NAS. However, syncing it via syncthing will probably take 18 days at the current rate. I’m at 52% right now and basically keep it up to see what happens in the end. Right now I notice that the syncthing instance running on the DS411slim starts disconnecting other devices after roughly 18 to 28 hours until no connection is left and syncing stops. The NAS GUI itself is still operating slowly but normally. The other services running on the NAS seem to work as well. In that state it is faster to just reboot the NAS than to log into the syncthing Web GUI and restart the service there. I didn’t try it via SSH since I have it disabled on that machine for security reasons. Checking logs didn’t really yield any information about why it doesn’t see the other machines anymore.
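To put the two figures above side by side, here is a rough back-of-the-envelope calculation. It assumes the 18 days is the projected total for the whole 850 GiB and that both transfers run at a steady average rate; the function name is only illustrative.

```python
GIB = 1024 ** 3
MIB = 1024 ** 2


def rate_mib_per_s(size_gib, seconds):
    """Average throughput in MiB/s for size_gib transferred in the given seconds."""
    return size_gib * GIB / seconds / MIB


plain_copy = rate_mib_per_s(850, 7 * 3600)        # plain copy: about 7 hours
syncthing = rate_mib_per_s(850, 18 * 24 * 3600)   # sync: projected 18 days

print(f"plain copy: {plain_copy:.1f} MiB/s")          # about 34.5 MiB/s
print(f"syncthing:  {syncthing:.2f} MiB/s")           # about 0.56 MiB/s
print(f"slowdown:   {plain_copy / syncthing:.0f}x")   # about 62x
```

So the sync is running at roughly half a MiB per second on average, which is far below what the disks and network manage for a plain copy.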

The syncthing instance on the DS411slim is also constantly swapping since the required amount of RAM for the sync job is much bigger than what DSM 6.2 allows apps/services to access. The syncthing GUI fluctuates between 130MiB and 400MiB. The NAS itself has 256MiB physical RAM, but happily swaps to the RAID 5 consisting of 4x 1TB HDDs. Most background services like Antivirus, VideoStation, iTunes server, etc. are stopped to minimize access to the RAID.

It seems to me that there might be a memory leak or something along those lines involved with v1.2.0, because prior initial sync jobs of new folders with many more but smaller files (60k) and many more subdirectories (6k) seemed to work fine. They were slow, but the disconnects between nodes were only temporary.

Maybe there should be some awareness about available system memory built into syncthing in the future or at least some warning in the GUI that syncthing isn’t really supported or a good idea on low memory devices like those old ARM-based NAS boxes.

If there is a memory leak that should of course be fixed. However,

You are simultaneously at the high-ish end of usage (95% of users have < 700 GiB) and low end of capacity (95% of users have 1 GiB of RAM or more) so the combo is a bit outside our optimization envelope. (Data from https://data.syncthing.net, the percentiles stuff.)

We have an official goal of running on even old or low-end stuff – though not necessarily with the biggest folder sizes. Traditionally phones were the low-end platform, but even those now have an order of magnitude more RAM than that.

NAS appliances are traditionally some of the most underpowered devices around and one of those things where I’ve personally sort of given up making things work smoothly. Apart from RAM size, this is where you’re likely to find oddball 32-bit SoC CPUs and whatnot. They’re even sort of explicitly excluded (point 5) from the above goal.

So, I get that this is a longish way of saying “you’re holding it wrong”. Sorry about that. If it works, it works.

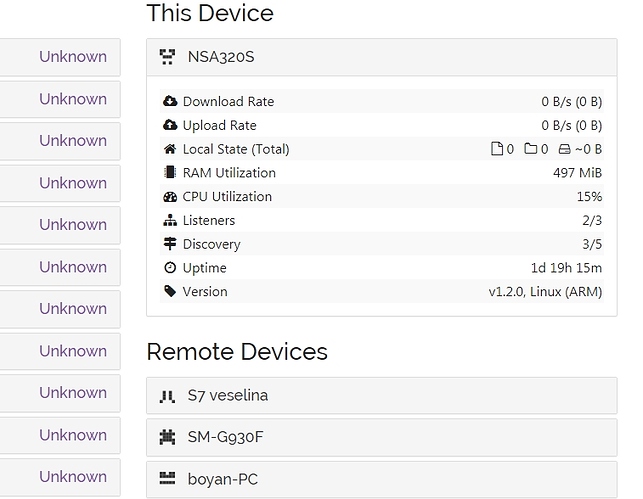

Wow, lots of replies, thanks. I also think this is related to the low RAM in my Zyxel NAS (low level piece of crap, NSA320S is the exact model). Anyway, as far as the advice to wait when syncing a big folder: this whole thing started after I tried to sync a 70 GB folder. I waited for 5 days and for some reason it did not sync. However, I think there is a specific problem: I tried to make a single folder for uploading pictures from 4 different phones. I had to give up, and I think this screwed up the whole sync process.

Hello:

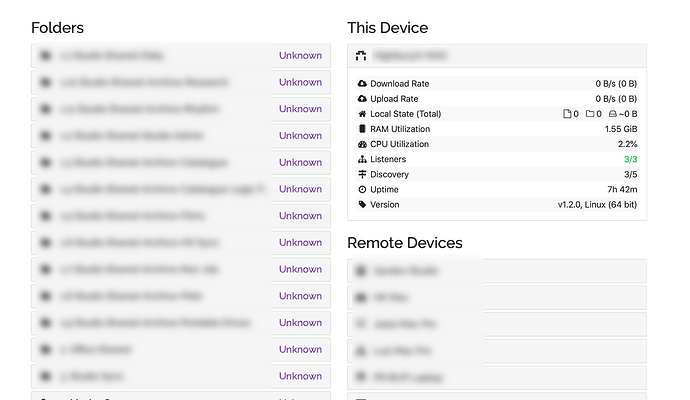

Ok - this has happened on one of my machines again. Here’s the UI:

The unit is reporting about 50% RAM free - and traditionally when it has gone over, Syncthing has been killed and restarted as expected.

2% CPU usage is much less than it would normally be on this unit - typically around 20-40%.

The uptime is much less than expected - should be multiple days, rather than 7h.

It’s got about 4 million files set up; a mirror unit (same NAS, same configuration - though some files have yet to transfer - it’s currently got 3.5m files) has the same setup - but doesn’t seem to be affected by this issue.

I’ve yet to capture SIGQUIT output - will post that as soon as I can.

Hope that’s some useful initial pointers…

Stacks from sigquit are great. However the reason for uptime and what was the last thing it was doing can also be obtained through “just” normal logs.

Same thing here, I keep getting an unpopulated list and unknown status of the shares while the CPU usage is quite low.

Get a dump from sigquit please

Ok - I’m trying this - but not seeing any output. Apologies if I’m being naive but I’m sending the following:

kill -3 <process_number_here> > log/path/here

The process appears to stop as expected - but I’m not seeing any output from the SIGQUIT…

The output is from the killed process, not the kill command itself.
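To illustrate the point, here is a small sketch using a stand-in process rather than Syncthing itself. The stand-in (a hypothetical Python child that dumps its stack to stderr on SIGQUIT) only mimics the general behaviour; the function name and log path are made up for the example. It shows that redirecting the kill command captures nothing, while the dump lands wherever the process's own output was redirected when it was started.

```python
import os
import signal
import subprocess
import sys
import tempfile
import time

# Hypothetical stand-in for the stalled process: on SIGQUIT it writes a stack
# dump to its own stderr, loosely like a Go program such as Syncthing does.
CHILD_SOURCE = """
import faulthandler, signal, sys, time
faulthandler.register(signal.SIGQUIT, file=sys.stderr)
print("ready", flush=True)
time.sleep(60)
"""


def sigquit_demo(log_path):
    """Return (what the kill command printed, what landed in the process log)."""
    with open(log_path, "wb") as log:
        # The redirection has to be in place when the process is started.
        proc = subprocess.Popen(
            [sys.executable, "-c", CHILD_SOURCE],
            stdout=subprocess.PIPE,
            stderr=log,
        )
    try:
        proc.stdout.readline()  # wait until the child has installed its handler
        # Equivalent of `kill -3 <pid> > file`: the kill command prints nothing.
        kill = subprocess.run(["sh", "-c", f"kill -3 {proc.pid}"], capture_output=True)
        deadline = time.monotonic() + 5
        while os.path.getsize(log_path) == 0 and time.monotonic() < deadline:
            time.sleep(0.05)
        time.sleep(0.2)  # give the child a moment to finish writing the dump
    finally:
        proc.kill()
        proc.wait()
        proc.stdout.close()
    with open(log_path, encoding="utf-8", errors="replace") as fh:
        return kill.stdout, fh.read()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        kill_output, dump = sigquit_demo(os.path.join(tmp, "process.log"))
    print("kill printed:", kill_output)
    print("process log:")
    print(dump)
```

In practice this means the stalled process must already have been started with its output going to a file or log before the signal is sent.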

Thanks - my misunderstanding!

At the moment I have Syncthing in a stalled state, but with no logging currently enabled. I presume it’s not possible to start logging in preparation for the SIGQUIT?

If logging currently goes to nowhere, no, I don’t think so.

Ok - thanks. I’ll get it restarted with logging running, and will report back.

Are there any specific STTRACE variables that might be useful for producing a valid output (as far as you can tell with so little information so far)?
