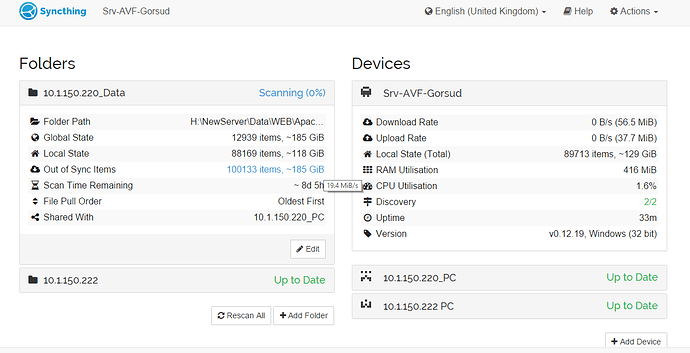

Hello! I use Syncthing to synchronize from many PCs into one (as a video backup). The main host PC holds up to 13 TB of data, and Syncthing freezes as shown in the screenshot:

I use Master folders on the PCs (they do not need to download, only upload). For now I have added only 2 PCs, but there will be about 100… Maybe there is a better way to collect from multiple PCs to one?

The screenshot suggests the scan will take 8 days. There will not be any syncing until the initial scan completes.

As Syncthing's CPU and RAM usage isn't high, it seems that your HDD can only read at less than 20 MiB/s.
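For reference, the 8-day figure is roughly what you get by dividing the data size by the read rate. A quick sketch, assuming 13 TiB of data and a steady 20 MiB/s (both rounded from the numbers in this thread, not measured; `scan_days` is just an illustrative helper):

```python
def scan_days(size_bytes: float, rate_bytes_per_s: float) -> float:
    """Estimate how many days a full initial scan takes at a constant read rate."""
    seconds = size_bytes / rate_bytes_per_s
    return seconds / 86400  # 86400 seconds per day


TIB = 2 ** 40
MIB = 2 ** 20

# Assumed inputs: 13 TiB to hash, disk sustaining about 20 MiB/s.
estimate = scan_days(13 * TIB, 20 * MIB)
print(f"Estimated initial scan: {estimate:.1f} days")
```

At a lower sustained rate the estimate grows proportionally, so anything that raises the read rate shortens the scan by the same factor.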

Also, you are either not using a server OS or using a really old and unsupported one, as it is 32-bit.

I can almost guarantee this will not work with 32-bit Syncthing - it will run out of addressable memory when processing data in the hundreds of gigabytes range. I tried 32-bit Syncthing on a ~350GB data set and there was nothing I could do to resolve it. Understandable, considering the magnitudes of data involved.

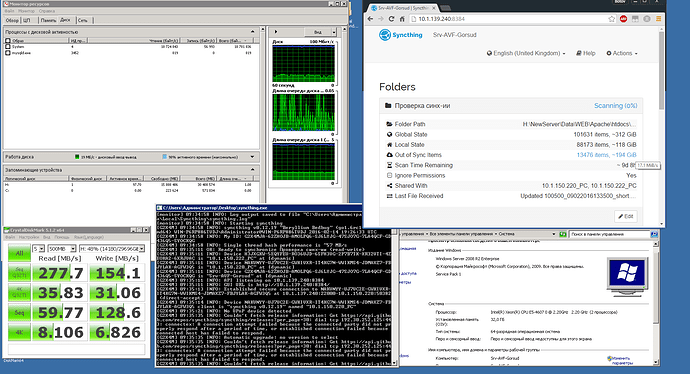

Thanks for the answer! I have now tried the x64 version, but the situation has not changed. And I do not see any load on the drive from Syncthing…

It says that your H disk is busy 97.7% of the time, so your bottleneck is your disk.

Maybe try to limit the number of hashers. I think the default setting is to use all the cores, but with normal hard drives this can negatively impact performance, so try setting the hashers to 3 and see if that makes a difference in the hashing speed.

Actions -> Advanced -> FolderName -> Hashers
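The same setting is also stored per folder in Syncthing's `config.xml`, as a `<hashers>` element inside the `<folder>` element. If you need to change it on many machines, something like this rough Python sketch could help. The sample XML, the folder ID `video-backup` and the path are made up for illustration, and `set_hashers` is a hypothetical helper, not part of Syncthing. Stop Syncthing and back up the real file before editing it.

```python
import xml.etree.ElementTree as ET

# Hypothetical, heavily trimmed config; a real config.xml has many more elements.
SAMPLE_CONFIG = """<configuration>
    <folder id="video-backup" path="H:/Data">
        <hashers>0</hashers>
    </folder>
</configuration>"""


def set_hashers(config_xml: str, folder_id: str, hashers: int) -> str:
    """Return config_xml with the <hashers> value of the given folder replaced."""
    root = ET.fromstring(config_xml)
    for folder in root.iter("folder"):
        if folder.get("id") == folder_id:
            node = folder.find("hashers")
            if node is None:
                # Add the element if this folder does not have one yet.
                node = ET.SubElement(folder, "hashers")
            node.text = str(hashers)
            return ET.tostring(root, encoding="unicode")
    raise KeyError(f"no folder with id {folder_id!r}")


updated = set_hashers(SAMPLE_CONFIG, "video-backup", 3)
print(updated)
```

Which value works best depends on the disk, so it is worth trying a few and watching the scan rate.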

@fpbard Yes! Scanning speed grew to 40 MB/s =) What is the maximum value that Hashers can be set to?

In your case setting it to 1 might be better than setting it to something higher. There is no maximum number, yet having more of them will cause more seeking.

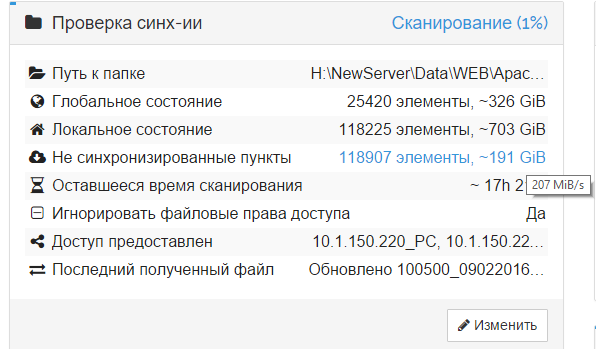

@AudriusButkevicius, I tried setting Hashers to 10, and the speed grew to 200 MB/s!

But when I set it to 1, the speed was 30 MB/s.

So… I have another question. I need to collect 300-400 GB of data from 100 computers into the same folder on the server. How should I do it: add 100 folders on the server that all refer to one place, or create one folder and add it to all 100 PCs?

Create one, and add it on the 100 PCs. But you might want to look for a different topology, as a server with 100 devices will have a large job to do.

You might want to do some snowflake topology.

How should I understand “different topology”?

For example: I have 3 PCs and 1 server.

The first PC has folder A, the second PC has folder B, and the third has folder C.

On the server I want them to end up in ‘Data\A’, ‘Data\B’ and ‘Data\C’, while the PCs do not download each other's folders. Like a one-way sync…

We have moved a bit from the original post. You will need individual shares on the server for each PC to share its folder to. Since you are not sharing any of the work with other nodes, the server is doing a lot of work in your example. Have you considered something like Borg Backup? What you describe is not really what Syncthing is designed for.
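To make the per-PC layout concrete, here is a rough Python sketch that only builds a plan (one share per PC, each landing in its own subfolder on the server). It does not talk to Syncthing at all. The PC names, the `D:\Data` root and the folder ID scheme are assumptions for illustration. The `sendonly` / `receiveonly` type names are what recent Syncthing versions use as far as I know; the "Master" folder mentioned earlier in this thread is the older name for send-only, and older versions may not have a receive-only type.

```python
from pathlib import PureWindowsPath


def build_share_plan(pc_names, server_root):
    """Return one folder entry per PC, each mapped to its own server subfolder."""
    root = PureWindowsPath(server_root)
    plan = []
    for name in pc_names:
        plan.append({
            "folder_id": f"backup-{name.lower()}",  # hypothetical ID scheme
            "pc": name,
            "pc_type": "sendonly",         # the PC only uploads
            "server_type": "receiveonly",  # the server only collects
            "server_path": str(root / name),
        })
    return plan


for entry in build_share_plan(["A", "B", "C"], r"D:\Data"):
    print(entry["folder_id"], "->", entry["server_path"])
```

Because each PC gets its own folder ID, no PC ever sees another PC's data, which matches the one-way setup described above.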

Thanks I got it!

Borg Backup is not exactly what I need: I need a Windows implementation, and deploying Cygwin and installing Borg on more than 100 PCs is difficult…

Thank you very much for your answers!

> I can almost guarantee this will not work with 32-bit Syncthing

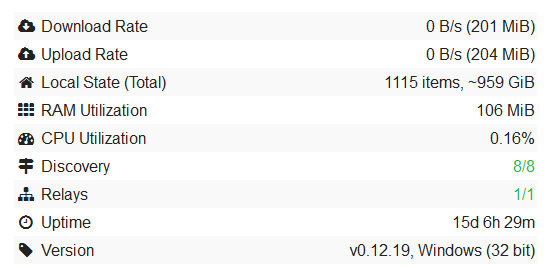

I beg to differ. I’m using Syncthing on an Atom 230 with 2 GB of RAM running 32-bit Windows Server 2008. It’s not super-fast (the CPU is obviously a bottleneck, although that is countered by the fact that the 2 TB disk is connected over a USB 2.0 port, so it’s nicely balanced, slow overall) =)

As you can see from the screenshot, it is currently handling close to 1 TB of data. RAM usage right now is 106 MB, but I’ve seen it go up and down. The ‘partner’ machine that it syncs with over the internet is currently using 196 MB for essentially the same dataset.

Anyway, YMMV but I’d be careful in stating what Syncthing can and can’t handle.

Just speaking from experience. It’s possible that the limits are hit when several devices request different parts of the index.
