One thousand files per transaction, with however many blocks etc that entails.

Yeah. Honestly, I’m OK with this. It’s a one-time thing per user, and that box has more files than probably 99% of users and is on an 800 MHz CPU from 13 years ago. I’m not CPU-shaming you, but I think it’s expected that that scenario isn’t going to be super quick.

No problem, I’m just sharing my observations for future reference.

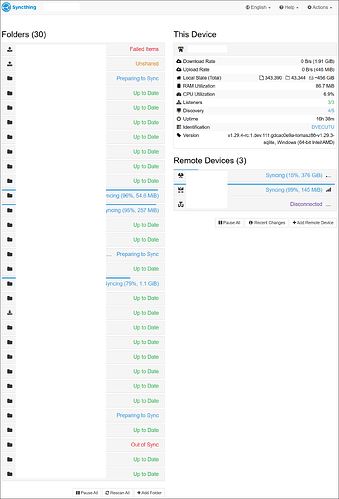

However, the number of files reported in the log has caught my attention. The log says there were 670,781 files in total, but the local state in Syncthing reports only 343,604 files. Why are the numbers so different? Are ignored files included in the database file count?

I’ve also noticed that the index exchange phase is very, very slow. It seems much slower than when exchanging indexes after --reset-deltas. Is this normal?

Files in this case includes directories, does that make it make more sense?

Not really. There are about 43,000 directories, so even including them, the “real” number of files and directories together is still under 400,000.

Deleted files perhaps? (Not sure when these are removed from the index but entries for those do stick around a while?)

You could actually run `select count(*) from files where deleted=1;` on the new database file (`sqlite3 index-v2.db`).
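For anyone who would rather script that check, here is a minimal Python sketch of the same query. The `files` table and `deleted` column come from the query above; everything else (the helper name `count_files` and the toy two-column schema used for the demo) is made up for illustration, since the real schema isn’t shown in this thread.

```python
import sqlite3


def count_files(conn):
    """Return (total, deleted) row counts from a `files` table."""
    total = conn.execute("SELECT count(*) FROM files").fetchone()[0]
    deleted = conn.execute(
        "SELECT count(*) FROM files WHERE deleted = 1"
    ).fetchone()[0]
    return total, deleted


# Demo on an in-memory database with a hypothetical, minimal schema.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE files (name TEXT, deleted INTEGER)")
conn.executemany(
    "INSERT INTO files VALUES (?, ?)",
    [("a.txt", 0), ("b.txt", 1), ("c.txt", 1)],
)

total, deleted = count_files(conn)
print(f"total={total} deleted={deleted} live={total - deleted}")
```

To try it on a real database, something like `sqlite3.connect("file:index-v2.db?mode=ro", uri=True)` opens the file read-only; it is probably safest to do that on a copy, or while Syncthing is stopped.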

Yeah that^ probably. Cool thing with the new DB is you can indeed take a look.

I just wanted to do a quick update on the situation, because while the migration itself, according to the log, took 2 hours, Syncthing on the device in question is still working on the database and exchanging indexes with the GUI almost completely unresponsive.

The GUI only loads if left untouched for a very long time, but then, if you try to refresh it, it starts all over again. The page just keeps loading and loading with no end to it. The log does get updated, but it seems to happen in bursts (e.g. every 20 minutes).

I’m sure the situation will stabilise eventually, but it’s been 16 hours already and the end still seems far away, so I think this may serve as a warning for all those who sync a lot of files on weak hardware - the migration may literally take days.

I’ve also observed Syncthing eating up a lot of RAM. On the device in question, it stays at 7 GB at the moment. The device has only 8 GB RAM total, so it’s running on swap memory right now, probably making the whole process that much slower.

So --reset-deltas is exactly equivalent to what happens on first startup after the migration. Certainly it’s bound to be a bit slower than previously, as we now do more work for each database insert, and we’re doing a lot of database inserts. If it seems pathological, a profile might be helpful. On my (admittedly smaller) index with my (admittedly faster) Raspberry Pi, it takes a few minutes.

Profiler was enabled, so I’ve just taken cpu and heap profiles, and a full goroutine stack dump. They were taken when the GUI was completely frozen.

- syncthing-cpu-windows-amd64-v1.29.4-rc.1.dev.111.gdcac0e9a-tomasz86-v1.29.3-sqlite-085916.pprof (9.1 KB)

- syncthing-heap-windows-amd64-v1.29.4-rc.1.dev.111.gdcac0e9a-tomasz86-v1.29.3-sqlite-090009.pprof (286.8 KB)

- goroutine.txt (572.4 KB)

Is there anything useful inside them?

Yes actually, it shows that 1) the GetDeviceSequence call is slow, which I sort of suspected, and should be accelerated, and 2) you’re using weak hashing which is reading all your files which you probably don’t want (but maybe you do)…

Do you mean I should set https://docs.syncthing.net/users/config.html#config-option-folder.weakhashthresholdpct to 101? I’ve been using the default setting, which is 25.

This should speed up the MAX predicate for remote sequence lookups.

You absolutely should, unless you have data that takes advantage of the weak hashing.
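As a rough illustration of that change, the sketch below sets the threshold to 101 for every folder in a config document. It assumes the folder setting is stored as a `weakHashThresholdPct` element under each `folder` element, mirroring the option name in the docs linked above; the sample XML and the helper name `disable_weak_hash` are made up for this example. The usual route is the advanced folder settings in the GUI, so treat this only as a picture of what ends up in the config.

```python
import xml.etree.ElementTree as ET

# Hypothetical, heavily trimmed config used only for this demo.
SAMPLE = """<configuration>
  <folder id="default" path="/data/default">
    <weakHashThresholdPct>25</weakHashThresholdPct>
  </folder>
  <folder id="photos" path="/data/photos">
  </folder>
</configuration>"""


def disable_weak_hash(root, value=101):
    """Set weakHashThresholdPct on every folder element, adding it if absent."""
    for folder in root.iter("folder"):
        el = folder.find("weakHashThresholdPct")
        if el is None:
            el = ET.SubElement(folder, "weakHashThresholdPct")
        el.text = str(value)
    return root


root = disable_weak_hash(ET.fromstring(SAMPLE))
values = [f.findtext("weakHashThresholdPct") for f in root.iter("folder")]
print(values)  # ['101', '101']
```

The threshold is a percentage of the file that must have changed before weak hashing kicks in, so a value above 100 can never be reached, which is why 101 effectively turns the feature off.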

Thanks, I will try to disable it then (once Syncthing stabilises, as I cannot even shut it down in this unresponsive state).

Honestly, I don’t really know what kind of data would benefit from weak hashing. I sync a large variety of files, ranging from simple text files, documents, program files, pictures and videos to large zip archives. I don’t sync extremely large files though (like VMs that can take a few dozen GBs per file).

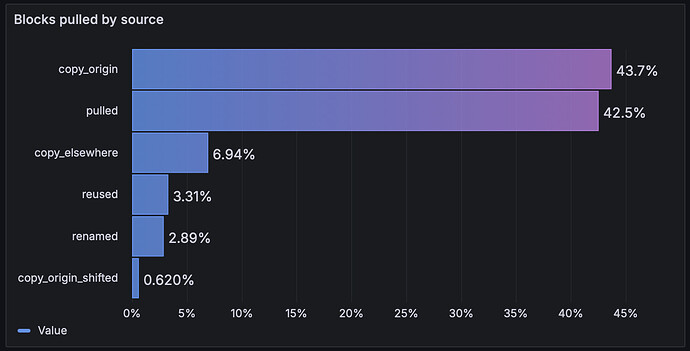

Almost none, it’s a bit of a side track, but I think that feature is on the chopping block after this initiative…

There are a few more commits on the sqlite branch which make at least the thing in your profile faster.

I think we had transfer saved by weak hash in the old stats. The numbers were rather underwhelming, if I remember correctly…

Not pulling its own weight would be an understatement.

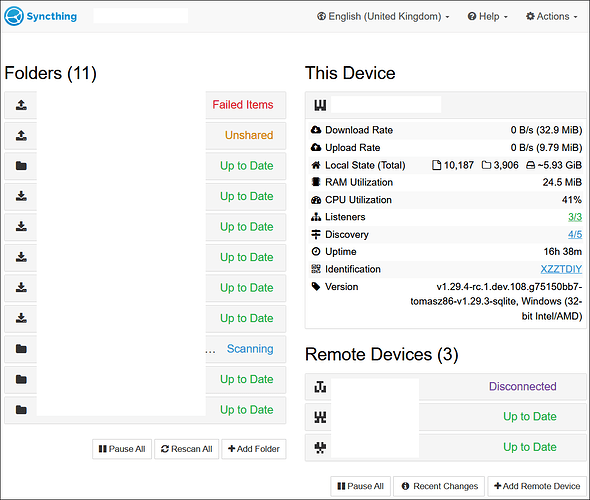

Yesterday I migrated the database on my only device running 32-bit Windows. It uses an Intel Atom Z3745D paired with 2 GB of RAM. The migration seemed to go well with no issues, but afterwards I found two panic logs related to memory.

panic-20250324-020625.log (201.1 KB)

Panic at 2025-03-24T02:06:25+01:00

fatal error: runtime: cannot allocate memory

runtime stack:

runtime.throw({0x20b5671, 0x1f})

runtime/panic.go:1101 +0x35 fp=0x3397faa0 sp=0x3397fa8c pc=0x142f0c5

runtime.persistentalloc1(0x3ffc, 0x0, 0x2b34978)

runtime/malloc.go:1957 +0x23a fp=0x3397facc sp=0x3397faa0 pc=0x13cb7fa

runtime.persistentalloc.func1()

runtime/malloc.go:1910 +0x33 fp=0x3397fae4 sp=0x3397facc pc=0x13cb5a3

runtime.persistentalloc(0x3ffc, 0x0, 0x2b34978)

runtime/malloc.go:1909 +0x41 fp=0x3397fb04 sp=0x3397fae4 pc=0x13cb561

runtime.(*fixalloc).alloc(0x2b161e0)

runtime/mfixalloc.go:90 +0x7b fp=0x3397fb20 sp=0x3397fb04 pc=0x13d24db

runtime.addfinalizer(0x64583c0, 0x22f79c4, 0x8, 0x2044000, 0x2044000)

runtime/mheap.go:1977 +0x35 fp=0x3397fb48 sp=0x3397fb20 pc=0x13e60b5

runtime.SetFinalizer.func2()

runtime/mfinal.go:534 +0x40 fp=0x3397fb64 sp=0x3397fb48 pc=0x13d22c0

runtime.systemstack(0x4000)

runtime/asm_386.s:374 +0x3d fp=0x3397fb68 sp=0x3397fb64 pc=0x143491d

It seems that the device ran out of RAM after the migration during the index exchange phase. On the one hand, this is probably understandable taking into account the hardware. On the other hand though, the device doesn’t really sync much, and the database is very small too (with the old one taking just 84 MB on the disk, and the new one taking 347 MB).

The good thing is that despite the panics, Syncthing managed to recover automatically and is still running normally at the moment.