Dude I’m already fending off what feels like hundreds of people in all the threads and commits hating every improvement I make, but yeah I do believe that’s on the agenda

It was on 1.29.4

I do have a significant number of files, probably over 6M across 6 drives; some server images are up to 1.5 TB in size. The old index folder is 12 GB in size.

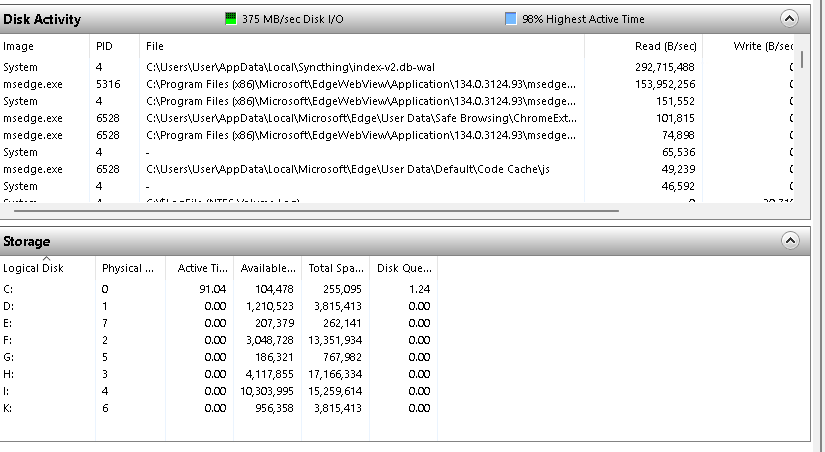

I have to say, the scanning is much faster, often maxing the IO across all the drives

Yeah those are some bigass files, that’ll do it. I have the fix committed, will be in the next update, probably tomorrow.

If the feature gets removed, maybe block the update on setups with ignoreDelete enabled? Otherwise, I’m sure we’ll see a flow of very angry users on the forum after having their files deleted, with no backup available.

This is assuming that removing ignoreDelete will cause all those deletions to be synced to the device that had it enabled before.

We would definitely not roll out an update that would start surprise deleting people’s files, I promise.
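For illustration, a pre-update guard along the lines suggested above could scan the config for folders that have ignoreDelete enabled. This is only a minimal sketch: the sample XML is made up, it assumes ignoreDelete appears as a child element of each folder in config.xml, and the function name is hypothetical rather than anything in Syncthing's updater.

```python
import xml.etree.ElementTree as ET

# Made-up sample config; assumes <ignoreDelete> is a child element of <folder>.
SAMPLE_CONFIG = """
<configuration>
    <folder id="photos" path="/data/photos">
        <ignoreDelete>true</ignoreDelete>
    </folder>
    <folder id="docs" path="/data/docs">
        <ignoreDelete>false</ignoreDelete>
    </folder>
</configuration>
"""


def folders_with_ignore_delete(config_xml: str) -> list:
    """Return the IDs of folders whose ignoreDelete element is 'true'."""
    root = ET.fromstring(config_xml)
    hits = []
    for folder in root.iter("folder"):
        # A missing element is treated as "false".
        value = folder.findtext("ignoreDelete", default="false")
        if value.strip().lower() == "true":
            hits.append(folder.get("id", ""))
    return hits


if __name__ == "__main__":
    blocked = folders_with_ignore_delete(SAMPLE_CONFIG)
    if blocked:
        print("Hold the update; ignoreDelete is set on:", ", ".join(blocked))
```

A check like this would only decide whether to hold back an update; what should happen to the affected folders afterwards is a separate question.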

I get your point. I also have to keep up with changes in various dependencies in various projects. Note that this was really not about the command-line flags that were mentioned earlier by others but more about the REST-API (as API changes probably won’t be trivial to adapt to).

My point was just that Syncthing Tray and other maintained integrations will not be magically spared from breaking in contrast to abandoned ones. It will always take effort (and time) to keep up.

If we give you a gRPC contract, the code will be auto-generated, and it’s just a matter of swapping the old for something new and better that makes more sense.

Extensions that are not maintained will not work, and I think that’s ok.

Doesn’t it?

% STTRACE=all STHOMEDIR=/tmp/foo ./bin/syncthing generate --no-default-folder

2025/04/02 23:37:37.894128 debug.go:31: DEBUG: Enabling lock logging at 100ms threshold

2025/04/02 23:37:37.895075 control_unix.go:35: DEBUG: SO_REUSEPORT supported

2025/04/02 23:37:37.895321 filesystem_copy_range.go:26: DEBUG: Registering all copyRange method

2025/04/02 23:37:37.895337 filesystem_copy_range.go:26: DEBUG: Registering standard copyRange method

2025/04/02 23:37:37.896939 logfs.go:83: DEBUG: logfs.go:83 basic /tmp/foo MkdirAll . -rwx------ <nil>

2025/04/02 23:37:37.896961 logfs.go:125: DEBUG: logfs.go:125 basic /tmp/foo Stat . {0x14000446680} <nil>

2025/04/02 23:37:37.896981 utils.go:64: INFO: Generating ECDSA key and certificate for syncthing...

2025/04/02 23:37:37.899914 generate.go:86: INFO: Device ID: AJSYRN4-5JMMYZS-LKZAUN4-IC2IIRO-QYVIALU-JNEWUEG-3MEVGTZ-DG4SXQC

2025/04/02 23:37:37.900189 utils.go:80: INFO: We will skip creation of a default folder on first start

2025/04/02 23:37:37.900389 logfs.go:95: DEBUG: logfs.go:95 basic /tmp/foo OpenFile .syncthing.tmp.439627769 2562 -rw------- &{0x14000049cf0 {0x1400007a3a8 .syncthing.tmp.439627769}} <nil>

2025/04/02 23:37:37.906293 logfs.go:71: DEBUG: logfs.go:71 basic /tmp/foo Lstat config.xml <nil> lstat /tmp/foo/config.xml: no such file or directory

2025/04/02 23:37:37.906406 logfs.go:119: DEBUG: logfs.go:119 basic /tmp/foo Rename .syncthing.tmp.439627769 config.xml <nil>

2025/04/02 23:37:37.906443 logfs.go:89: DEBUG: logfs.go:89 basic /tmp/foo Open . &{0x14000049cf0 {0x1400007a3c8 .}} <nil>

2025/04/02 23:37:37.911333 logfs.go:107: DEBUG: logfs.go:107 basic /tmp/foo Remove .syncthing.tmp.439627769 remove /tmp/foo/.syncthing.tmp.439627769: no such file or directory

syncthing debug reset-database

Ah, and also not the reset-database I think. I’ll clean that up.

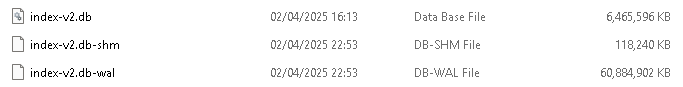

Just had a quick look to see how v2 was doing before bed and it’s used all the C drive space. Bit worried that the new database is going to be very disk space hungry…

The first clue was that most of the remote devices had disconnected and there were virtually no logs, but one said the disk is full, so I expanded the VM by 100 GB. I will see in the morning if it needs more.

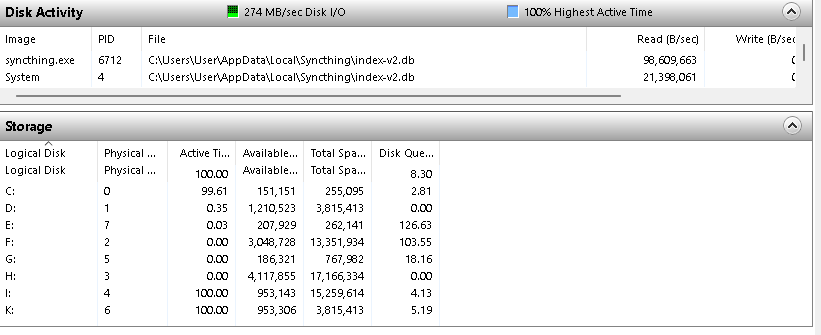

Thought I would restart Syncthing to see if the remote devices would reconnect. However, it’s taking a very long time to load, specifically because it is reading the 60 GB index file.

Apparently, 7 minutes…

[start] 2025/04/02 23:12:06 INFO: syncthing v2.0.0-beta.3 “Hafnium Hornet” (go1.24.1 windows-amd64) builder@github.syncthing.net 2025-04-02 10:26:24 UTC

[RTF25] 2025/04/02 23:19:30 INFO:
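The two timestamps above give the exact startup time. A small sketch of the arithmetic (the helper name is made up; the timestamp layout is taken from the log lines):

```python
from datetime import datetime

# Timestamp layout as it appears in the log lines, e.g. "2025/04/02 23:12:06".
LOG_TIME_FORMAT = "%Y/%m/%d %H:%M:%S"


def elapsed_seconds(start: str, end: str) -> int:
    """Return the whole seconds between two log timestamps."""
    start_dt = datetime.strptime(start, LOG_TIME_FORMAT)
    end_dt = datetime.strptime(end, LOG_TIME_FORMAT)
    return int((end_dt - start_dt).total_seconds())


if __name__ == "__main__":
    secs = elapsed_seconds("2025/04/02 23:12:06", "2025/04/02 23:19:30")
    print(f"{secs // 60} min {secs % 60} s")  # 7 min 24 s
```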

Yikes. That should hopefully improve with the latest checkpoint and transaction adjustments done by @calmh

The 60 GB WAL file has shrunk and the index-v2 has grown to 16 GB. However, whilst it’s a much faster scanner, it’s becoming too aggressive on the drives.

The disk queues are getting longer, with less throughput. Syncthing is still scanning the drives at a point where the v1 index would have finished. I will restart Syncthing and enable the concurrency limit to see if that helps.

@terry this one should have hopefully a bit better WAL handling
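To show what a checkpoint does to a WAL file in general, here is a minimal sketch on a throwaway database. It assumes the v2 database is SQLite in WAL mode (the thread only mentions a WAL file and checkpoints), and it is not Syncthing's actual code; the table and function names are made up.

```python
import os
import sqlite3
import tempfile


def wal_sizes_around_checkpoint(rows: int = 5000) -> tuple:
    """Return the WAL file size in bytes before and after a TRUNCATE checkpoint."""
    with tempfile.TemporaryDirectory() as tmp:
        db_path = os.path.join(tmp, "demo.db")
        conn = sqlite3.connect(db_path)
        try:
            conn.execute("PRAGMA journal_mode=WAL")
            conn.execute("CREATE TABLE files (name TEXT, size INTEGER)")
            conn.executemany(
                "INSERT INTO files VALUES (?, ?)",
                ((f"file-{i}", i) for i in range(rows)),
            )
            conn.commit()
            # Committed writes sit in the -wal file until a checkpoint
            # moves them into the main database file.
            before = os.path.getsize(db_path + "-wal")
            conn.execute("PRAGMA wal_checkpoint(TRUNCATE)").fetchone()
            after = os.path.getsize(db_path + "-wal")
        finally:
            conn.close()
        return before, after


if __name__ == "__main__":
    before, after = wal_sizes_around_checkpoint()
    print(f"WAL before checkpoint: {before} bytes, after: {after} bytes")
```

If checkpoints cannot complete, for example because of long-running readers or transactions, the WAL keeps growing, which would be consistent with the 60 GB file reported above.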

I tested synchronization on a folder that doesn’t use the encryption feature and contains large files that are updated frequently. On the official 1.29.3 release, incremental block synchronization works normally and is fast after a file is updated, but it does not seem to be available in the 2.0 beta. Has the 2.0 beta changed the incremental block synchronization feature?

Can you clarify what you mean by that and what you’re seeing? I did a few tests just now with overwriting parts in the middle of a large file and got just the changed pieces transferred, which is what I expect.
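A rough sketch of the idea behind that test: split the file into fixed-size blocks, hash each one, and only the blocks whose hashes differ need to be transferred. The block size and function names here are illustrative assumptions, not Syncthing's actual implementation.

```python
import hashlib

BLOCK_SIZE = 128 * 1024  # illustrative block size, not necessarily Syncthing's


def block_hashes(data: bytes, block_size: int = BLOCK_SIZE) -> list:
    """Hash each fixed-size block of the data."""
    return [
        hashlib.sha256(data[off:off + block_size]).hexdigest()
        for off in range(0, len(data), block_size)
    ]


def changed_blocks(old: bytes, new: bytes, block_size: int = BLOCK_SIZE) -> list:
    """Return indexes of blocks in `new` that differ from, or are absent in, `old`."""
    old_h = block_hashes(old, block_size)
    new_h = block_hashes(new, block_size)
    return [
        i for i, h in enumerate(new_h)
        if i >= len(old_h) or old_h[i] != h
    ]


if __name__ == "__main__":
    original = bytes(8 * BLOCK_SIZE)
    modified = bytearray(original)
    start = 3 * BLOCK_SIZE + 100  # overwrite a few bytes inside block 3
    modified[start:start + 10] = b"\xff" * 10
    print(changed_blocks(original, bytes(modified)))  # [3]
```

Note that this only covers in-place overwrites. Inserting or removing bytes shifts every later block boundary, so a fixed-block comparison like this one would flag all following blocks as changed.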

With the newest beta, I’m seeing the following when trying to --reset-deltas from the command line.

syncthing.exe: error: unknown flag --reset-deltas

Hm, that’s related to a change of mine (that also made it into v1). It appears the default type is not ‘basic’ as it should be, but rather the empty string, and that doesn’t translate to ‘basic’ anymore. Is this something you think happens in Syncthing-Fork or rather in Syncthing’s config handling?

The Folder Path completion seems to be broken…

That is not supposed to be a change in how the config or API works. @pixelspark can we make sure this is not breaking? If we allowed an empty filesystem type previously (which I considered but the code diff looked like we didn’t…), then we should probably continue doing so.
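The backwards-compatible behaviour being discussed could look roughly like this: an empty or missing filesystem type falls back to ‘basic’, and anything else passes through unchanged. The names are hypothetical and this is a sketch, not Syncthing's code.

```python
from typing import Optional

DEFAULT_FILESYSTEM_TYPE = "basic"


def normalize_filesystem_type(value: Optional[str]) -> str:
    """Map an empty or missing filesystem type to the default, 'basic'."""
    if value is None or value.strip() == "":
        return DEFAULT_FILESYSTEM_TYPE
    return value


if __name__ == "__main__":
    print(normalize_filesystem_type(""))       # basic
    print(normalize_filesystem_type("basic"))  # basic
```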