Hello:

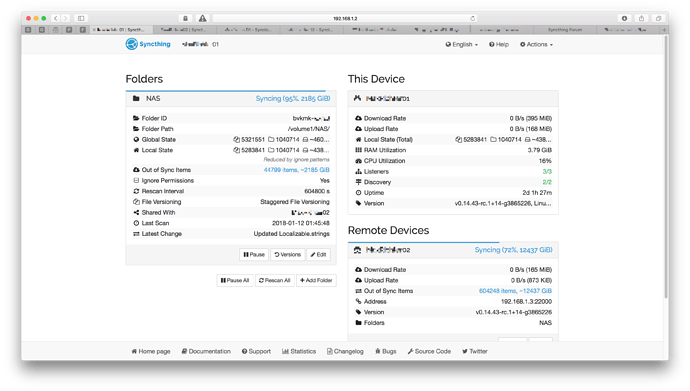

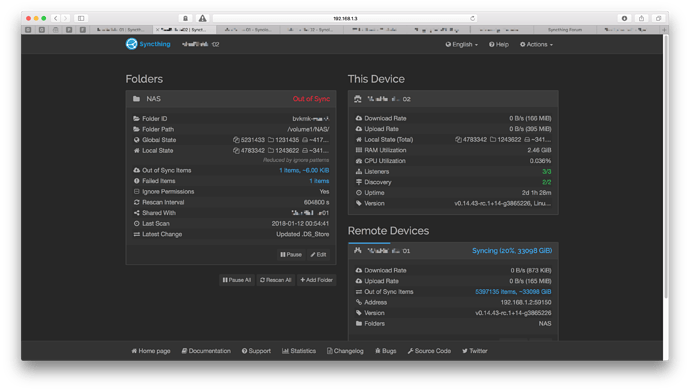

OK, it looks like the scanner completed, but for some reason it thinks it’s only 1 file behind. I know this isn’t true: I can see some top-level folders (with many GB of data) in the shared folder on NAS01 which haven’t replicated to NAS02.

Here’s what the log revealed:

[OVPYX] 2018/01/15 23:45:12.811214 folder.go:123: INFO: Completed initial scan of readwrite folder "NAS" (bvkmk-*****)

[OVPYX] 2018/01/15 23:45:12.811293 verboseservice.go:48: VERBOSE: Folder "bvkmk-*****" is now idle

[OVPYX] 2018/01/15 23:45:12.811535 verboseservice.go:48: VERBOSE: Folder "bvkmk-*****" is now syncing

[OVPYX] 2018/01/15 23:45:46.835518 verboseservice.go:48: VERBOSE: Started syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file)

[OVPYX] 2018/01/15 23:45:47.864196 rwfolder.go:1748: INFO: Puller (folder "NAS" (bvkmk-*****), file "file/path/1/.DS_Store"): finisher: pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/15 23:45:47.864364 verboseservice.go:48: VERBOSE: Finished syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file): pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/15 23:46:12.666126 verboseservice.go:48: VERBOSE: Started syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file)

[OVPYX] 2018/01/15 23:46:12.767074 verboseservice.go:48: VERBOSE: Finished syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file): pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/15 23:46:36.697754 verboseservice.go:48: VERBOSE: Started syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file)

[OVPYX] 2018/01/15 23:46:36.801728 verboseservice.go:48: VERBOSE: Finished syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file): pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/15 23:46:36.801818 rwfolder.go:294: INFO: Folder "NAS" (bvkmk-*****) isn't making progress. Pausing puller for 1m0s.

[OVPYX] 2018/01/15 23:46:36.802155 verboseservice.go:48: VERBOSE: FolderErrors events.Event{SubscriptionID:80, GlobalID:80, Time:time.Time{wall:0xbe8f6d372fc8d81f, ext:22895691818283, loc:(*time.Location)(0x119f800)}, Type:2097152, Data:map[string]interface {}{"errors":[]model.fileError{model.fileError{Path:"file/path/1/.DS_Store", Err:"finisher: pull: peers who had this file went away, or the file has changed while syncing. will retry later"}}, "folder":"bvkmk-*****"}}

[OVPYX] 2018/01/15 23:46:36.802218 verboseservice.go:48: VERBOSE: Folder "bvkmk-*****" is now idle

[OVPYX] 2018/01/15 23:46:36.802243 verboseservice.go:48: VERBOSE: Folder "bvkmk-*****" is now syncing

[OVPYX] 2018/01/15 23:47:01.153150 verboseservice.go:48: VERBOSE: Summary for folder "bvkmk-*****" is map[localFiles:4783351 globalDirectories:1231435 localBytes:36687065420323 globalBytes:44808573788174 needBytes:6148 needDirectories:0 localDirectories:1243622 inSyncBytes:44808573782026 state:syncing needDeletes:0 globalDeleted:433070 needFiles:1 globalFiles:5231442 sequence:16931096 inSyncFiles:5231441 localDeleted:373986 localSymlinks:14048 version:16931096 globalSymlinks:14048 needSymlinks:0]

[OVPYX] 2018/01/15 23:47:06.569122 verboseservice.go:48: VERBOSE: Started syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file)

[OVPYX] 2018/01/15 23:47:06.669925 rwfolder.go:1748: INFO: Puller (folder "NAS" (bvkmk-*****), file "file/path/1/.DS_Store"): finisher: pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/15 23:47:06.670424 verboseservice.go:48: VERBOSE: Finished syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file): pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/15 23:47:32.868678 verboseservice.go:48: VERBOSE: Started syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file)

[OVPYX] 2018/01/15 23:47:33.040854 verboseservice.go:48: VERBOSE: Finished syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file): pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/15 23:48:00.039678 verboseservice.go:48: VERBOSE: Started syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file)

[OVPYX] 2018/01/15 23:48:00.222843 verboseservice.go:48: VERBOSE: Finished syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file): pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/15 23:48:00.222949 verboseservice.go:48: VERBOSE: FolderErrors events.Event{SubscriptionID:90, GlobalID:90, Time:time.Time{wall:0xbe8f6d4c0d4829c9, ext:22979112959711, loc:(*time.Location)(0x119f800)}, Type:2097152, Data:map[string]interface {}{"folder":"bvkmk-*****", "errors":[]model.fileError{model.fileError{Path:"file/path/1/.DS_Store", Err:"finisher: pull: peers who had this file went away, or the file has changed while syncing. will retry later"}}}}

[OVPYX] 2018/01/15 23:48:00.222976 rwfolder.go:294: INFO: Folder "NAS" (bvkmk-*****) isn't making progress. Pausing puller for 1m0s.

[OVPYX] 2018/01/15 23:48:00.223029 verboseservice.go:48: VERBOSE: Folder "bvkmk-*****" is now idle

[OVPYX] 2018/01/15 23:48:00.223177 verboseservice.go:48: VERBOSE: Folder "bvkmk-*****" is now syncing

[OVPYX] 2018/01/15 23:48:25.585517 verboseservice.go:48: VERBOSE: Started syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file)

[OVPYX] 2018/01/15 23:48:25.740217 rwfolder.go:1748: INFO: Puller (folder "NAS" (bvkmk-*****), file "file/path/1/.DS_Store"): finisher: pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/15 23:48:25.740320 verboseservice.go:48: VERBOSE: Finished syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file): pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/15 23:48:51.692604 verboseservice.go:48: VERBOSE: Started syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file)

[OVPYX] 2018/01/15 23:48:51.937545 verboseservice.go:48: VERBOSE: Finished syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file): pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/15 23:49:18.996140 verboseservice.go:48: VERBOSE: Started syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file)

[OVPYX] 2018/01/15 23:49:19.131341 verboseservice.go:48: VERBOSE: Finished syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file): pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/15 23:49:19.131464 verboseservice.go:48: VERBOSE: FolderErrors events.Event{SubscriptionID:99, GlobalID:99, Time:time.Time{wall:0xbe8f6d5fc7d3e4b3, ext:23058021453769, loc:(*time.Location)(0x119f800)}, Type:2097152, Data:map[string]interface {}{"folder":"bvkmk-*****", "errors":[]model.fileError{model.fileError{Path:"file/path/1/.DS_Store", Err:"finisher: pull: peers who had this file went away, or the file has changed while syncing. will retry later"}}}}

[OVPYX] 2018/01/15 23:49:19.131493 rwfolder.go:294: INFO: Folder "NAS" (bvkmk-*****) isn't making progress. Pausing puller for 1m0s.

[OVPYX] 2018/01/15 23:49:19.131619 verboseservice.go:48: VERBOSE: Folder "bvkmk-*****" is now idle

[OVPYX] 2018/01/15 23:49:19.131733 verboseservice.go:48: VERBOSE: Folder "bvkmk-*****" is now syncing

[OVPYX] 2018/01/15 23:49:45.235340 verboseservice.go:48: VERBOSE: Started syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file)

[OVPYX] 2018/01/15 23:49:45.405802 rwfolder.go:1748: INFO: Puller (folder "NAS" (bvkmk-*****), file "file/path/1/.DS_Store"): finisher: pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/15 23:49:45.406144 verboseservice.go:48: VERBOSE: Finished syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file): pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/15 23:50:12.504596 verboseservice.go:48: VERBOSE: Started syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file)

[OVPYX] 2018/01/15 23:50:12.677974 verboseservice.go:48: VERBOSE: Finished syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file): pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/15 23:50:38.830219 verboseservice.go:48: VERBOSE: Started syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file)

[OVPYX] 2018/01/15 23:50:38.941417 verboseservice.go:48: VERBOSE: Finished syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file): pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/15 23:50:38.941532 verboseservice.go:48: VERBOSE: FolderErrors events.Event{SubscriptionID:108, GlobalID:108, Time:time.Time{wall:0xbe8f6d73b81ca743, ext:23137831528547, loc:(*time.Location)(0x119f800)}, Type:2097152, Data:map[string]interface {}{"errors":[]model.fileError{model.fileError{Path:"file/path/1/.DS_Store", Err:"finisher: pull: peers who had this file went away, or the file has changed while syncing. will retry later"}}, "folder":"bvkmk-*****"}}

[OVPYX] 2018/01/15 23:50:38.941737 rwfolder.go:294: INFO: Folder "NAS" (bvkmk-*****) isn't making progress. Pausing puller for 2m0s.

[OVPYX] 2018/01/15 23:50:38.941855 verboseservice.go:48: VERBOSE: Folder "bvkmk-*****" is now idle

[OVPYX] 2018/01/15 23:50:38.943439 verboseservice.go:48: VERBOSE: Folder "bvkmk-*****" is now syncing

[OVPYX] 2018/01/15 23:51:04.295652 verboseservice.go:48: VERBOSE: Started syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file)

[OVPYX] 2018/01/15 23:51:04.577233 rwfolder.go:1748: INFO: Puller (folder "NAS" (bvkmk-*****), file "file/path/1/.DS_Store"): finisher: pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/15 23:51:04.577578 verboseservice.go:48: VERBOSE: Finished syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file): pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/15 23:51:30.835442 verboseservice.go:48: VERBOSE: Started syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file)

[OVPYX] 2018/01/15 23:51:31.017902 verboseservice.go:48: VERBOSE: Finished syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file): pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/15 23:51:56.507589 verboseservice.go:48: VERBOSE: Started syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file)

[OVPYX] 2018/01/15 23:51:56.612000 verboseservice.go:48: VERBOSE: Finished syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file): pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/15 23:51:56.612096 verboseservice.go:48: VERBOSE: FolderErrors events.Event{SubscriptionID:117, GlobalID:117, Time:time.Time{wall:0xbe8f6d87247a26fc, ext:23215502111772, loc:(*time.Location)(0x119f800)}, Type:2097152, Data:map[string]interface {}{"folder":"bvkmk-*****", "errors":[]model.fileError{model.fileError{Path:"file/path/1/.DS_Store", Err:"finisher: pull: peers who had this file went away, or the file has changed while syncing. will retry later"}}}}

[OVPYX] 2018/01/15 23:51:56.612252 rwfolder.go:294: INFO: Folder "NAS" (bvkmk-*****) isn't making progress. Pausing puller for 4m0s.

[OVPYX] 2018/01/15 23:51:56.612435 verboseservice.go:48: VERBOSE: Folder "bvkmk-*****" is now idle

[OVPYX] 2018/01/15 23:55:20.150882 verboseservice.go:48: VERBOSE: Completion for folder "bvkmk-*****" on device GSXHK4J-*******-*******-*******-*******-*******-*******-******* is 21.73096117185034%

[OVPYX] 2018/01/15 23:55:56.612710 verboseservice.go:48: VERBOSE: Folder "bvkmk-*****" is now syncing

[OVPYX] 2018/01/15 23:56:21.378949 verboseservice.go:48: VERBOSE: Started syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file)

[OVPYX] 2018/01/15 23:56:21.487711 rwfolder.go:1748: INFO: Puller (folder "NAS" (bvkmk-*****), file "file/path/1/.DS_Store"): finisher: pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/15 23:56:21.487845 verboseservice.go:48: VERBOSE: Finished syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file): pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/15 23:56:45.435036 verboseservice.go:48: VERBOSE: Started syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file)

[OVPYX] 2018/01/15 23:56:45.542141 verboseservice.go:48: VERBOSE: Finished syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file): pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/15 23:57:09.497611 verboseservice.go:48: VERBOSE: Started syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file)

[OVPYX] 2018/01/15 23:57:09.726808 rwfolder.go:294: INFO: Folder "NAS" (bvkmk-*****) isn't making progress. Pausing puller for 8m0s.

[OVPYX] 2018/01/15 23:57:09.726883 verboseservice.go:48: VERBOSE: Finished syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file): pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/15 23:57:09.726986 verboseservice.go:48: VERBOSE: FolderErrors events.Event{SubscriptionID:127, GlobalID:127, Time:time.Time{wall:0xbe8f6dd56b51db87, ext:23528616911537, loc:(*time.Location)(0x119f800)}, Type:2097152, Data:map[string]interface {}{"folder":"bvkmk-*****", "errors":[]model.fileError{model.fileError{Path:"file/path/1/.DS_Store", Err:"finisher: pull: peers who had this file went away, or the file has changed while syncing. will retry later"}}}}

[OVPYX] 2018/01/15 23:57:09.727014 verboseservice.go:48: VERBOSE: Folder "bvkmk-*****" is now idle

[OVPYX] 2018/01/15 23:57:33.427328 verboseservice.go:48: VERBOSE: Summary for folder "bvkmk-*****" is map[inSyncFiles:5231441 inSyncBytes:44808573782026 globalDeleted:433070 localSymlinks:14048 needSymlinks:0 needFiles:1 localFiles:4783351 state:idle localBytes:36687065420323 needBytes:6148 globalDirectories:1231435 globalBytes:44808573788174 version:16931096 sequence:16931096 needDirectories:0 needDeletes:0 globalSymlinks:14048 localDeleted:373986 globalFiles:5231442 localDirectories:1243622]

[OVPYX] 2018/01/15 23:57:40.929135 structs.go:42: INFO: Removing NAT port mapping: external TCP address 12.34.56.78:23215, NAT UPnP_BTHomeHub5.0B-1_5CDC965E12BF/WANDevice/urn:upnp-org:serviceId:WANIPConn1/urn:schemas-upnp-org:service:WANIPConnection:1/http://192.168.1.254:52826/ctl/IPConn is no longer available.

[OVPYX] 2018/01/15 23:57:40.929198 structs.go:32: INFO: New NAT port mapping: external TCP address 12.34.56.78:38501 to local address 0.0.0.0:22000.

[OVPYX] 2018/01/15 23:57:41.528901 structs.go:42: INFO: Removing NAT port mapping: external TCP address 12.34.56.78:41172, NAT UPnP_BTHomeHub5.0B-1_5CDC965E12BF/WANDevice/urn:upnp-org:serviceId:WANPPPConn1/urn:schemas-upnp-org:service:WANPPPConnection:1/http://192.168.1.254:52826/ctl/PPPConn is no longer available.

[OVPYX] 2018/01/15 23:57:41.528962 structs.go:32: INFO: New NAT port mapping: external TCP address 12.34.56.78:44675 to local address 0.0.0.0:22000.

[OVPYX] 2018/01/15 23:57:51.537456 verboseservice.go:48: VERBOSE: Listen address tcp://0.0.0.0:22000 resolution has changed: lan addresses: [tcp://0.0.0.0:22000] wan addresses: [tcp://0.0.0.0:22000 tcp://0.0.0.0:38501 tcp://0.0.0.0:38501 tcp://0.0.0.0:44675 tcp://0.0.0.0:44675]

[OVPYX] 2018/01/16 00:04:40.706351 verboseservice.go:48: VERBOSE: Completion for folder "bvkmk-*****" on device GSXHK4J-*******-*******-*******-*******-*******-*******-******* is 21.73096117185034%

[OVPYX] 2018/01/16 00:05:09.727246 verboseservice.go:48: VERBOSE: Folder "bvkmk-*****" is now syncing

...

[OVPYX] 2018/01/16 06:22:03.239678 verboseservice.go:48: VERBOSE: Started syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file)

[OVPYX] 2018/01/16 06:22:03.760696 rwfolder.go:1748: INFO: Puller (folder "NAS" (bvkmk-*****), file "file/path/1/.DS_Store"): finisher: pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/16 06:22:03.760916 verboseservice.go:48: VERBOSE: Finished syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file): pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/16 06:22:27.421125 verboseservice.go:48: VERBOSE: Started syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file)

[OVPYX] 2018/01/16 06:22:27.520540 verboseservice.go:48: VERBOSE: Finished syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file): pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/16 06:22:51.168289 verboseservice.go:48: VERBOSE: Started syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file)

[OVPYX] 2018/01/16 06:22:51.280270 rwfolder.go:294: INFO: Folder "NAS" (bvkmk-*****) isn't making progress. Pausing puller for 1h4m0s.

[OVPYX] 2018/01/16 06:22:51.280325 verboseservice.go:48: VERBOSE: Finished syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file): pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/16 06:22:51.280601 verboseservice.go:48: VERBOSE: FolderErrors events.Event{SubscriptionID:228, GlobalID:228, Time:time.Time{wall:0xbe8f846ed0b443e6, ext:46670170375942, loc:(*time.Location)(0x119f800)}, Type:2097152, Data:map[string]interface {}{"folder":"bvkmk-*****", "errors":[]model.fileError{model.fileError{Path:"file/path/1/.DS_Store", Err:"finisher: pull: peers who had this file went away, or the file has changed while syncing. will retry later"}}}}

[OVPYX] 2018/01/16 06:22:51.280639 verboseservice.go:48: VERBOSE: Folder "bvkmk-*****" is now idle

[OVPYX] 2018/01/16 06:23:14.600525 verboseservice.go:48: VERBOSE: Summary for folder "bvkmk-*****" is map[globalSymlinks:14048 globalFiles:5231442 globalDirectories:1231435 globalDeleted:433070 needDeletes:0 localDirectories:1243622 needSymlinks:0 localDeleted:373986 localSymlinks:14048 inSyncBytes:44808573782026 localBytes:36687065420323 state:idle needFiles:1 needBytes:6148 version:16931096 sequence:16931096 globalBytes:44808573788174 localFiles:4783351 inSyncFiles:5231441 needDirectories:0]

[OVPYX] 2018/01/16 06:29:46.315631 verboseservice.go:48: VERBOSE: Completion for folder "bvkmk-*****" on device GSXHK4J-*******-*******-*******-*******-*******-*******-******* is 21.73096117185034%

[OVPYX] 2018/01/16 06:29:50.738714 structs.go:42: INFO: Removing NAT port mapping: external TCP address 12.34.56.78:44675, NAT UPnP_BTHomeHub5.0B-1_5CDC965E12BF/WANDevice/urn:upnp-org:serviceId:WANPPPConn1/urn:schemas-upnp-org:service:WANPPPConnection:1/http://192.168.1.254:52826/ctl/PPPConn is no longer available.

[OVPYX] 2018/01/16 06:29:50.738766 structs.go:32: INFO: New NAT port mapping: external TCP address 12.34.56.78:41172 to local address 0.0.0.0:22000.

[OVPYX] 2018/01/16 06:29:51.082722 structs.go:42: INFO: Removing NAT port mapping: external TCP address 12.34.56.78:38501, NAT UPnP_BTHomeHub5.0B-1_5CDC965E12BF/WANDevice/urn:upnp-org:serviceId:WANIPConn1/urn:schemas-upnp-org:service:WANIPConnection:1/http://192.168.1.254:52826/ctl/IPConn is no longer available.

[OVPYX] 2018/01/16 06:29:51.082771 structs.go:32: INFO: New NAT port mapping: external TCP address 12.34.56.78:23215 to local address 0.0.0.0:22000.

[OVPYX] 2018/01/16 06:30:01.094422 verboseservice.go:48: VERBOSE: Listen address tcp://0.0.0.0:22000 resolution has changed: lan addresses: [tcp://0.0.0.0:22000] wan addresses: [tcp://0.0.0.0:22000 tcp://0.0.0.0:23215 tcp://0.0.0.0:23215 tcp://0.0.0.0:41172 tcp://0.0.0.0:41172]

[OVPYX] 2018/01/16 07:00:01.002261 structs.go:42: INFO: Removing NAT port mapping: external TCP address 12.34.56.78:23215, NAT UPnP_BTHomeHub5.0B-1_5CDC965E12BF/WANDevice/urn:upnp-org:serviceId:WANIPConn1/urn:schemas-upnp-org:service:WANIPConnection:1/http://192.168.1.254:52826/ctl/IPConn is no longer available.

[OVPYX] 2018/01/16 07:00:01.002322 structs.go:32: INFO: New NAT port mapping: external TCP address 12.34.56.78:38501 to local address 0.0.0.0:22000.

[OVPYX] 2018/01/16 07:00:01.593645 structs.go:42: INFO: Removing NAT port mapping: external TCP address 12.34.56.78:41172, NAT UPnP_BTHomeHub5.0B-1_5CDC965E12BF/WANDevice/urn:upnp-org:serviceId:WANPPPConn1/urn:schemas-upnp-org:service:WANPPPConnection:1/http://192.168.1.254:52826/ctl/PPPConn is no longer available.

[OVPYX] 2018/01/16 07:00:01.593694 structs.go:32: INFO: New NAT port mapping: external TCP address 12.34.56.78:44675 to local address 0.0.0.0:22000.

[OVPYX] 2018/01/16 07:00:11.602024 verboseservice.go:48: VERBOSE: Listen address tcp://0.0.0.0:22000 resolution has changed: lan addresses: [tcp://0.0.0.0:22000] wan addresses: [tcp://0.0.0.0:22000 tcp://0.0.0.0:38501 tcp://0.0.0.0:38501 tcp://0.0.0.0:44675 tcp://0.0.0.0:44675]

[OVPYX] 2018/01/16 07:26:51.280793 verboseservice.go:48: VERBOSE: Folder "bvkmk-*****" is now syncing

[OVPYX] 2018/01/16 07:27:15.236716 verboseservice.go:48: VERBOSE: Started syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file)

[OVPYX] 2018/01/16 07:27:15.817436 rwfolder.go:1748: INFO: Puller (folder "NAS" (bvkmk-*****), file "file/path/1/.DS_Store"): finisher: pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/16 07:27:15.817629 verboseservice.go:48: VERBOSE: Finished syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file): pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/16 07:27:39.477422 verboseservice.go:48: VERBOSE: Started syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file)

[OVPYX] 2018/01/16 07:27:39.573854 verboseservice.go:48: VERBOSE: Finished syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file): pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/16 07:28:03.215867 verboseservice.go:48: VERBOSE: Started syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file)

[OVPYX] 2018/01/16 07:28:03.333601 verboseservice.go:48: VERBOSE: Finished syncing "bvkmk-*****" / "file/path/1/.DS_Store" (update file): pull: peers who had this file went away, or the file has changed while syncing. will retry later

[OVPYX] 2018/01/16 07:28:03.333879 rwfolder.go:294: INFO: Folder "NAS" (bvkmk-*****) isn't making progress. Pausing puller for 1h4m0s.

[OVPYX] 2018/01/16 07:28:03.334006 verboseservice.go:48: VERBOSE: FolderErrors events.Event{SubscriptionID:241, GlobalID:241, Time:time.Time{wall:0xbe8f8840d3e5ef33, ext:50582223962667, loc:(*time.Location)(0x119f800)}, Type:2097152, Data:map[string]interface {}{"folder":"bvkmk-*****", "errors":[]model.fileError{model.fileError{Path:"file/path/1/.DS_Store", Err:"finisher: pull: peers who had this file went away, or the file has changed while syncing. will retry later"}}}}

[OVPYX] 2018/01/16 07:28:03.334037 verboseservice.go:48: VERBOSE: Folder "bvkmk-*****" is now idle

[OVPYX] 2018/01/16 07:28:26.668128 verboseservice.go:48: VERBOSE: Summary for folder "bvkmk-*****" is map[globalSymlinks:14048 inSyncBytes:44808573782026 version:16931096 localBytes:36687065420323 needDirectories:0 needDeletes:0 globalDirectories:1231435 needSymlinks:0 localDeleted:373986 localSymlinks:14048 sequence:16931096 localFiles:4783351 localDirectories:1243622 needFiles:1 inSyncFiles:5231441 state:idle globalDeleted:433070 needBytes:6148 globalFiles:5231442 globalBytes:44808573788174]

[OVPYX] 2018/01/16 07:30:11.108626 structs.go:42: INFO: Removing NAT port mapping: external TCP address 12.34.56.78:38501, NAT UPnP_BTHomeHub5.0B-1_5CDC965E12BF/WANDevice/urn:upnp-org:serviceId:WANIPConn1/urn:schemas-upnp-org:service:WANIPConnection:1/http://192.168.1.254:52826/ctl/IPConn is no longer available.

[OVPYX] 2018/01/16 07:30:11.108683 structs.go:32: INFO: New NAT port mapping: external TCP address 12.34.56.78:23215 to local address 0.0.0.0:22000.

[OVPYX] 2018/01/16 07:30:11.455273 structs.go:42: INFO: Removing NAT port mapping: external TCP address 12.34.56.78:44675, NAT UPnP_BTHomeHub5.0B-1_5CDC965E12BF/WANDevice/urn:upnp-org:serviceId:WANPPPConn1/urn:schemas-upnp-org:service:WANPPPConnection:1/http://192.168.1.254:52826/ctl/PPPConn is no longer available.

[OVPYX] 2018/01/16 07:30:11.455733 structs.go:32: INFO: New NAT port mapping: external TCP address 12.34.56.78:41172 to local address 0.0.0.0:22000.

[OVPYX] 2018/01/16 07:30:21.464456 verboseservice.go:48: VERBOSE: Listen address tcp://0.0.0.0:22000 resolution has changed: lan addresses: [tcp://0.0.0.0:22000] wan addresses: [tcp://0.0.0.0:22000 tcp://0.0.0.0:23215 tcp://0.0.0.0:23215 tcp://0.0.0.0:41172 tcp://0.0.0.0:41172]

[OVPYX] 2018/01/16 07:35:01.714054 verboseservice.go:48: VERBOSE: Completion for folder "bvkmk-*****" on device GSXHK4J-*******-*******-*******-*******-*******-*******-******* is 21.73096117185034%
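
As a rough cross-check, the Summary lines above can be parsed to compare the local and global counters directly. This is only a sketch: the helper names `parse_summary` and `sync_gap` are hypothetical (not part of Syncthing), and it assumes the `local*` and `global*` counters in the log are directly comparable.

```python
import re

# Summary line copied from the 23:47:01 log entry above.
SAMPLE_LINE = (
    '[OVPYX] 2018/01/15 23:47:01.153150 verboseservice.go:48: VERBOSE: '
    'Summary for folder "bvkmk-*****" is map[localFiles:4783351 '
    'globalDirectories:1231435 localBytes:36687065420323 '
    'globalBytes:44808573788174 needBytes:6148 needDirectories:0 '
    'localDirectories:1243622 inSyncBytes:44808573782026 state:syncing '
    'needDeletes:0 globalDeleted:433070 needFiles:1 globalFiles:5231442 '
    'sequence:16931096 inSyncFiles:5231441 localDeleted:373986 '
    'localSymlinks:14048 version:16931096 globalSymlinks:14048 needSymlinks:0]'
)


def parse_summary(line: str) -> dict:
    """Extract the key:value pairs from the Go-style map[...] dump."""
    match = re.search(r"map\[(.*)\]", line)
    if match is None:
        raise ValueError("no map[...] section found in line")
    fields = {}
    for pair in match.group(1).split():
        key, _, value = pair.partition(":")
        # Numeric counters become ints; anything else (e.g. state) stays a string.
        fields[key] = int(value) if value.isdigit() else value
    return fields


def sync_gap(fields: dict) -> dict:
    """Compare what exists globally with what is present locally.

    Assumption: local* and global* counters measure the same thing.
    """
    return {
        "missing_files": fields["globalFiles"] - fields["localFiles"],
        "missing_bytes": fields["globalBytes"] - fields["localBytes"],
        "need_files": fields["needFiles"],
        "need_bytes": fields["needBytes"],
    }


if __name__ == "__main__":
    gap = sync_gap(parse_summary(SAMPLE_LINE))
    for name, value in gap.items():
        print(f"{name}: {value}")
```

On that Summary line the gap comes out at 448091 files and 8121508367851 bytes (roughly 8.1 TB), even though `needFiles` is 1 and `needBytes` is 6148, which matches what I’m seeing on disk.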

Does this suggest that NAS02 isn’t receiving index updates from NAS01? Any thoughts about where to go digging next?

Many thanks,

Pants.