I know there is a FileModifyDate issue with non-rooted android below 9, so I made a one-way sync folder for smartphone photos/vids on my andoid 8 tablet. Yet I experience something ill. Maybe 1 out of 10 or 20 media files is only copied partially to the tablet when both syncthing instances (v1.8) state the folder ‘Up to Date’. First I assumed that only the thumbails might have been incomplete when the tablet media parser made the gallery too soon. But on one hand, I think destination copies should only get the final name after the content is fully transferred, and on the other hand it is not only a thumbnail issue, but the file body itself is also affected. I assume that log files and other additional data might be needed to better understand what happens, so I kindly ask for any guidance what I should supply for that reason, to prepare another sync run accordingly.

I don’t think that’s possible, because we only sync whole files or nothing. The application that runs on the mobile is the same as it runs on the desktops, and if we’ve corrupted files we’d known by now.

I am not sure what advice to give you. I don’t think any logs will help identify what corrupts the files, as I doubt it’s us.

We might be obstructing some process that post-processes images or something.

I think that’s an issue reported every now and then on android: Some versions/apps/? pre-allocates the entire file, but writes only partially, which prompts the filesystem watcher such that we pick up the partial file. Then later it finishes writing to the file without changing modtime or size, so we never see that change. That’s only relevant if the second write is very late, as there’s a delay between filesystem event and actual scanning on Syncthing’s side (10s). I remember this happening in the context of cameras I think. Anyway not much we can do about it, you can touch the file on the android (probably only possible using adb?) if that’s the issue.

Thank you for the support! The media files were created during the day, while the sync was performed hours later in the evening. If I understand the explanation right, it describes things on the source device, which can hardly be the cause here. I suggest I fully exit syncthing on the source device during the day and start it just for the sync session. Agree? I can also do a simultaneous sync to two targets. If the cause is on the source device, the same files must be bad in the same way on both targets, right? What worries me on top, is that triggering manual scan showed no effect i. e. the devices still showed ‘Up to Date’ although definitely the databases must have contained different hashes - or the target device database does not reflect the file content properly?

Yes, what I describe concerns the “source device”. In that scenario the hashes in the Syncthing db are not the same as what you’d get when hashing the files on disk. That’s because Syncthing hashed the files before they were changed to their final state on disk. Thus both devices show up-to-date, because both devices have the intermediate state. And that’s the only state Syncthing knows about. There’s nothing Syncthing can do, because it can’t detect the change on disk as the size and modtime didn’t change (rehashing everything on every scan is not practicable).

Whether this is the actual problem you observe I cannot say. What you could do to check is to hash the file on the source and destination yourself to see if they differ.

That’s consistent with my theory. If you can bump the modification time on the original file it will sync the final, working file. As to how to prevent the problem: You could increase the fsWatcherDelayS option (advanced), however noone knows to what (at least I doubt anyone knows why and for how long android/the app/… delays writing the actual content).

Just reading this and curious: Does Syncthing have a protection against writing pulled file content, fs watcher is being triggered by the os and picking up it’s own intermediate change? Is it exlcuding its own .syncthing.tmp files from the file watching?

Well, I’m also interested if touching the file on the source side, e.g. on terminal or ADB shell, helps the sync process.

Syncthing doesn’t scan it’s own temp files. The watcher will be triggered, because it’s triggered on anything, but if it’s a temp file nothing is done.

OK I’ll try 3600s delay on the source device tomorrow with new files.

(.stignore is

.nomedia

.stfolder

.stignore

.stversions

(?d)*Cache*

(?d)cache

(?d)*sync-conflict-*

(?d)*.log

(?d)*.tmp*

(?i)!*Templ*

(?d)(?i)*Temp*

(?i)recycle*

(?d).thumb*)

imsodin, thank you again that you give answers to my questions.

I did a sync now to the larger group of devices at home, which also includes 3 Windows 10 devices.

Again, with media from e.g. Friday, I have some broken copies.

E.g. with an image IMG_20200828_155956.jpg, I find just the top 33% on the Android 8 tablet, and on the tablet it has the date of today 8:31 PM. However, this very file is good on 3 Windows computers, where it shows creation dates of today 6:31 PM, 7:40 PM, and 9:17 PM (checked SHA1).

I find no explanation how the source device could store a bad copy of the image for 49.5 hours to send it to the Android target, and send good copies before and after that to Windows targets.

Rather I like to think that the damage occurs on the Android target. Maybe because the device is unable to retain the original modification date, ST stops syncing from the source. But very sorry to say, I’m kinda stuck in my imagination that ST should first create a ‘~syncthing~NAME.EXT.tmp’ file, next fully copy the binary content, and finally rename it to NAME.EXT, whatever the modification date of the intermediate ~syncthing~NAME.EXT.tmp file is (because this file clearly is not supposed to be another original on the target device).

I hope that my narrow-minded-ness does not bother you nor anyone. If it is helpful, I can manage to add another Android 9 device for testing. Besides of that, I still have the same question as in the OP - can I assist somehow to trace down what really happens, so it can be solved?

Thanks for the followup. Just let me recap so you can correct in case I got something wrong:

The source Android device and all windows devices have the same data on disk, only the android device has a different, broken file (checked through hashing the files on disk). And on all devices including the target one the Syncthing folders have status up-to-date (also check web UI on the android app just in case).

Then the scenario I described indeed does not apply. I don’t have any concrete ideas to explain this behaviour, so my next step would be querying the rest api for info on that file (https://docs.syncthing.net/rest/db-file-get.html). However that might be hard (impossible) on android. Or capture logs when it happens with STTRACE=db,model debug facilities enabled, however you can’t reproduce this reliably, right?

Hi,

Just a note: I’d suggest opening the web UI for remote access on the Android temporarily. You’d then be able to query the rest api conveniently via powershell/curl.

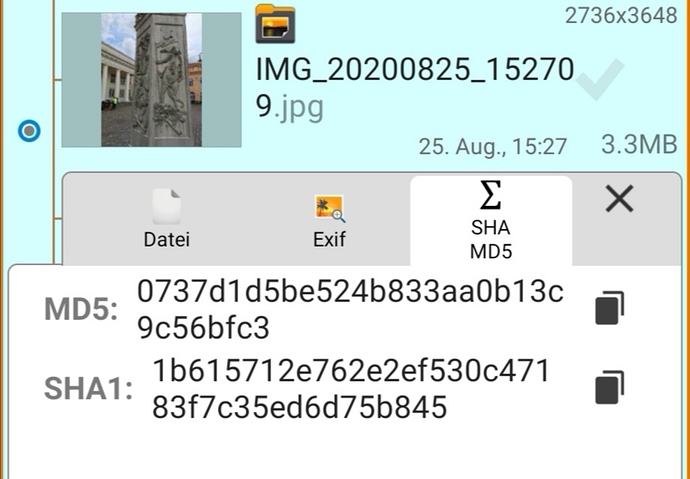

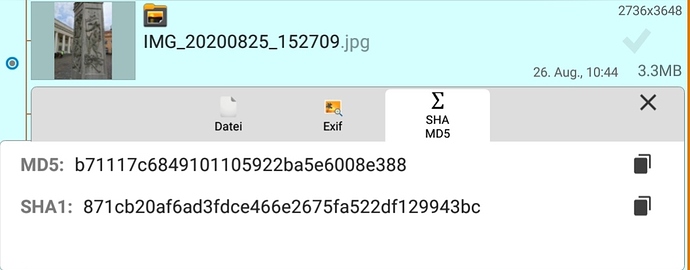

![]()

I’m not perfectly sure I messed it up with the above example

(phone wants to update and warns about data loss, so I moved the files to other places on the Windows devices yesterday without thinking. The Android’s ST apps were stopped then. Today I paused the folder there first, because otherwise ST would remove the file. But curl read “No such object in the index”, so I made the folder non-shared and restarted ST)

good file on source device:

{ "availability": null, "global": { "deleted": false, "ignored": false, "invalid": false, "localFlags": 0, "modified": "2020-08-28T13:59:58.3Z", "modifiedBy": "PYKRH4E", "mustRescan": false, "name": "Camera/IMG_20200828_155956.jpg", "noPermissions": true, "numBlocks": 37, "sequence": 738, "size": 4809971, "type": "FILE", "version": [ "PYKRH4E:1598804879" ] }, "local": { "deleted": false, "ignored": false, "invalid": false, "localFlags": 0, "modified": "2020-08-28T13:59:58.3Z", "modifiedBy": "PYKRH4E", "mustRescan": false, "name": "Camera/IMG_20200828_155956.jpg", "noPermissions": true, "numBlocks": 37, "sequence": 738, "size": 4809971, "type": "FILE", "version": [ "PYKRH4E:1598804879" ] } }

bad file on target device:

{ "availability": [ { "id": "SVL32QD-xxxxxxx-xxxxxxx-xxxxxxx-xxxxxxx-xxxxxxx-xxxxxxx-xxxxxxx", "fromTemporary": false } ], "global": { "deleted": false, "ignored": false, "invalid": false, "localFlags": 0, "modified": "2020-08-28T15:59:58.3+02:00", "modifiedBy": "64I6IGZ", "mustRescan": false, "name": "Camera\\IMG_20200828_155956.jpg", "noPermissions": false, "numBlocks": 37, "permissions": "0644", "sequence": 3959, "size": 4809971, "type": "FILE", "version": [ "PYKRH4E:1598804879", "64I6IGZ:1598973506" ] }, "local": { "deleted": false, "ignored": false, "invalid": false, "localFlags": 0, "modified": "2020-08-28T15:59:58.3+02:00", "modifiedBy": "64I6IGZ", "mustRescan": false, "name": "Camera\\IMG_20200828_155956.jpg", "noPermissions": false, "numBlocks": 37, "permissions": "0644", "sequence": 3959, "size": 4809971, "type": "FILE", "version": [ "PYKRH4E:1598804879", "64I6IGZ:1598973506" ] } }

(I wasn’t aware that I once checked “ignore permissions” on the source device ![]() )

)

PYRKRH4E are the first letters of the source device ID, 64I6IGZ and SVL32QD of Windows devices.

The device 64I6IGZ has modified the file, as evident from the more recent mod. time and modified by fields. The target device has that “changed” file (looks like only modtime has changed, size is the same), the source device doesn’t. Are they connected?

64I6IGZ is my main desktop PC, which probably received this file and forwarded the information that it has it first. As I explained, I struggled with keeping the experiment clean here, because I also wanted to avoid data loss due to the pending phone update. The file is not modified content-wise on the PC, take that for granted please. As a lesson from the theories, complications and ambiguities so far, I’ll set up a new sync folder just for the experiment, that does only sync from the desktop PC (Windows 10 x64, Core i5 CPU) to the suspect Android 8 device, and copy photos there until the effect happens. Then I’ll post the REST data.

Results for this run are: 22 of 108 files damaged, one of them is ‘20200902_133101.jpg’.

/rest/db/file of good source file on 64I6IGZ…

{ "availability": [ { "id": "CTXVC6O-...", "fromTemporary": false } ], "global": { "deleted": false, "ignored": false, "invalid": false, "localFlags": 0, "modified": "2020-09-02T13:31:01+02:00", "modifiedBy": "64I6IGZ", "mustRescan": false, "name": "20200902_133101.jpg", "noPermissions": false, "numBlocks": 28, "permissions": "0644", "sequence": 111, "size": 3611421, "type": "FILE", "version": [ "64I6IGZ:1599157040" ] }, "local": { "deleted": false, "ignored": false, "invalid": false, "localFlags": 0, "modified": "2020-09-02T13:31:01+02:00", "modifiedBy": "64I6IGZ", "mustRescan": false, "name": "20200902_133101.jpg", "noPermissions": false, "numBlocks": 28, "permissions": "0644", "sequence": 111, "size": 3611421, "type": "FILE", "version": [ "64I6IGZ:1599157040" ] } }

/rest/db/file of damaged target file on CTXVC60…

{ "availability": [ { "id": "64I6IGZ-...", "fromTemporary": false } ], "global": { "deleted": false, "ignored": false, "invalid": false, "localFlags": 0, "modified": "2020-09-02T11:31:01Z", "modifiedBy": "64I6IGZ", "mustRescan": false, "name": "20200902_133101.jpg", "noPermissions": false, "numBlocks": 28, "permissions": "0644", "sequence": 111, "size": 3611421, "type": "FILE", "version": [ "64I6IGZ:1599157040" ] }, "local": { "deleted": false, "ignored": false, "invalid": false, "localFlags": 0, "modified": "2020-09-02T11:31:01Z", "modifiedBy": "64I6IGZ", "mustRescan": false, "name": "20200902_133101.jpg", "noPermissions": false, "numBlocks": 28, "permissions": "0644", "sequence": 111, "size": 3611421, "type": "FILE", "version": [ "64I6IGZ:1599157040" ] } }

I’ll wipe everything, prepare STTRACE=db,model and run again

For the 2nd run, I paused all other devices and folders, to keep the log file clear. Only four (different) of the 108 files were damaged this time. Normally, the desktop PC shares 16 folders with 5 devices (about 440,000+ files), and the Android tablet shares 14 folders with 4 devices (maybe 4,700 files), this time one folder containing 108 files with one device.

The /REST data are as follows

PC:

{ "availability": [ { "id": "CTXVC6O-...", "fromTemporary": false } ], "global": { "deleted": false, "ignored": false, "invalid": false, "localFlags": 0, "modified": "2020-09-02T15:29:48+02:00", "modifiedBy": "64I6IGZ", "mustRescan": false, "name": "20200902_152948.jpg", "noPermissions": false, "numBlocks": 71, "permissions": "0644", "sequence": 196, "size": 9195424, "type": "FILE", "version": [ "64I6IGZ:1599159262" ] }, "local": { "deleted": false, "ignored": false, "invalid": false, "localFlags": 0, "modified": "2020-09-02T15:29:48+02:00", "modifiedBy": "64I6IGZ", "mustRescan": false, "name": "20200902_152948.jpg", "noPermissions": false, "numBlocks": 71, "permissions": "0644", "sequence": 196, "size": 9195424, "type": "FILE", "version": [ "64I6IGZ:1599159262" ] } }

Android tablet, damaged:

{ "availability": [ { "id": "64I6IGZ-...", "fromTemporary": false } ], "global": { "deleted": false, "ignored": false, "invalid": false, "localFlags": 0, "modified": "2020-09-02T13:29:48Z", "modifiedBy": "64I6IGZ", "mustRescan": false, "name": "20200902_152948.jpg", "noPermissions": false, "numBlocks": 71, "permissions": "0644", "sequence": 61, "size": 9195424, "type": "FILE", "version": [ "64I6IGZ:1599159262" ] }, "local": { "deleted": false, "ignored": false, "invalid": false, "localFlags": 0, "modified": "2020-09-02T13:29:48Z", "modifiedBy": "64I6IGZ", "mustRescan": false, "name": "20200902_152948.jpg", "noPermissions": false, "numBlocks": 71, "permissions": "0644", "sequence": 61, "size": 9195424, "type": "FILE", "version": [ "64I6IGZ:1599159262" ] } }

The PC’s log file was empty ![]() . I stored the tablet’s log file; please ask for what you’d like to see from it.

. I stored the tablet’s log file; please ask for what you’d like to see from it.

(This file is largely OK. The test pictures were rotated between runs to make double sure the new data were not already somewhere on the target)

SOLVED

Today I made more checks and found out it was probably an incompatibility issue between the tablet and the micro SD card: Several runs with internal storage and a different card as the targets finished without a single corrupted transfer. Really sorry for any concerns and loss of time I have caused <3

This topic was automatically closed 30 days after the last reply. New replies are no longer allowed.