Syncthing uses a database to store index information and other things. This is pretty essential to how it works. Currently we’re using a LevelDB implementation, and it’s served us well but we’re seeing a lot of unexplained crashes and other worrying things. For this reason we’ve looked at alternatives. Badger is one alternative and we’ve implemented this as a database backend. However it’s a fundamental change that requires careful testing before any kind of roll out.

This is where you can help. As of Syncthing 1.7.0 it’s easy to switch between the LevelDB and Badger database backends, without having to reindex or reinstall.

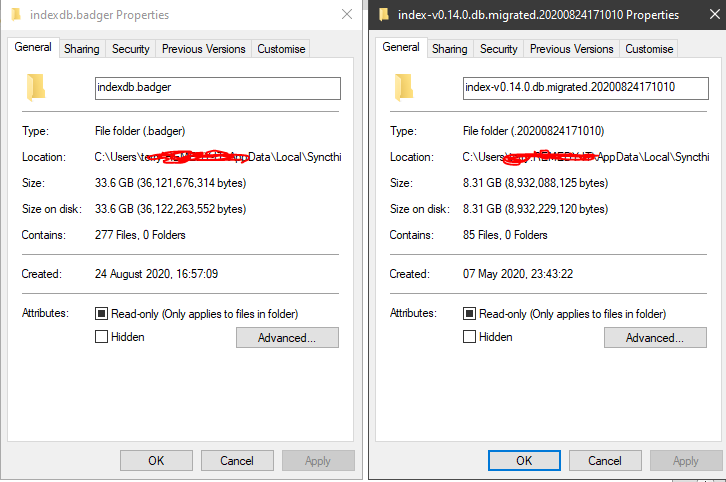

There are usage details on the docs site, but the short of it is that if you start with the USE_BADGER environment variable set the database will be converted and you’ll use Badger. If you restart without the environment variable the conversion will be done in the other direction and you’ll use LevelDB as always.

We’d love to hear stories of success, but horror stories (should they occur) are the essential ones. Back up your data.

(For what it’s worth I’ve run all my machines on Badger for the last month without ill effects.)

Later edit

Don’t test this on Windows, as there are issues. (See below)

Don’t test this on Windows, as there are issues. (See below)

What OS was this on? Looks like this is

What OS was this on? Looks like this is