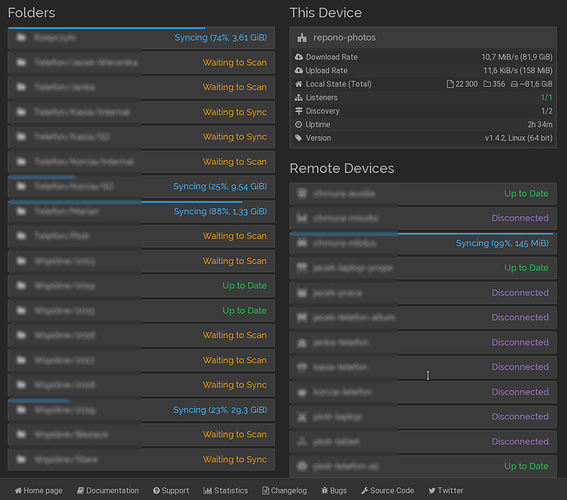

Hi, I tried to recreate my syncthing node (about 250 GB of photos) and I have an insane load average: 996,77, 969,46, 881,29. I think something is not going right.

I think so too. But from the given info, who knows what.

What should I post? config.xml, logs?

top and GUI screenshots are where I would start

top with threads: tmux-screen-capture-syncthing-2-0-20200416T112132.log (13.0 KB)

This all looks normal and reasonable to me, apart from the load average number. I would suspect that the user space ntfs driver has some part in that, but it’s not something I’ve tried myself. To some extent it’s just a number, though.

It looks like each of the hundreds of threads is trying to read from disk and this causes such a high load average

Are there hundreds of threads? There’s a few tens in the output you posted, which makes total sense with the screenshot. It’s better if you actually post the information you are seeing yourself rather than something else…

NTFS on Linux, or macOS for that matter, has always given me headaches. I bet you’d have the same kind of experience if you tried to just read/write a hundred files at the same time.

ps huH p $PID

ps_huH.log (94.2 KB) - 1048 threads
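For anyone who wants the thread count without a 94 KB log: on Linux the kernel also reports it on the `Threads:` line of `/proc/<pid>/status`. Here is a minimal Go sketch that reads it. The helper name `threadCount` is made up for this example, and it inspects its own process via `/proc/self/status`; substitute the Syncthing PID to check that instead.

```go
package main

import (
	"bufio"
	"fmt"
	"os"
	"strconv"
	"strings"
)

// threadCount (hypothetical helper) extracts the value of the "Threads:"
// line from the text of a Linux /proc/<pid>/status file.
func threadCount(status string) (int, error) {
	sc := bufio.NewScanner(strings.NewReader(status))
	for sc.Scan() {
		line := sc.Text()
		if strings.HasPrefix(line, "Threads:") {
			return strconv.Atoi(strings.TrimSpace(strings.TrimPrefix(line, "Threads:")))
		}
	}
	return 0, fmt.Errorf("no Threads: line found")
}

func main() {
	// /proc/self/status describes this program; use /proc/<pid>/status
	// to look at another process.
	data, err := os.ReadFile("/proc/self/status")
	if err != nil {
		fmt.Println("could not read status (not on Linux?):", err)
		return
	}
	n, err := threadCount(string(data))
	if err != nil {
		fmt.Println(err)
		return
	}
	fmt.Println("threads:", n)
}
```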

Yeah that’s a lot of threads. Did you tweak any advanced settings around copiers, max pending puller kilobytes, stuff like that?

yeah I use ntfs (on veracrypt) because I want to have it portable and it is the only solution which I found

I didn’t change anything

copiers = 0 and Puller Max Pending KiB = 0 for every folder

Actually, I think there may be an issue in the general case (with default settings) if your network is faster than your storage and responses come in faster than we can write them to disk. That could be what you’re suffering from here, what with the ntfs and the crypto and stuff, especially if those are also rotating disks and you’re on a local or fast network…

We’ll ask for more data from the network as soon as we get a response to a previous request, and assume that we’ll have time to actually write that data to disk. If we don’t, we’ll end up accumulating I/O bound threads.

I’m running a second instance of syncthing on my computer, on a USB disk

Okay. I’m not sure why you’d do that, but at least it means you probably have a fast network in this case, yeah. Regardless, this is something we should fix.

Is there some way to limit the total number of threads, or can I limit it with some other tool (systemd, docker)?

I don’t think so. But, really, if these are local directories on both sides: rsync

I wanted to have a backup (portable) of my photos and the simplest solution seemed to be to use syncthing

Edit (I cannot reply, limit for new users): with syncthing I have better control over what I want to have on my disk, and it can download from various devices