Scanning is finished?

Scanning is finished. What worries me is that the global state on the client is much lower than the global/local state on the master.

From the example you showed above, it seems the file is now in sync (going by the master/slave printout you provided), and the side that was downloading stopped because the file changed underneath its feet.

I suggest you pick a large file that is actively being downloaded, and provide the same info.

There is also an override button visible, implying that the other side had (or now has) the file with different content.

I feel that you ran rsync or something like that halfway through the scan (or after it), which led to this.

Also, if you ran rsync, why aren’t all files in sync?

As I said, we did not rsync the complete folder due to time constraints, and we were hoping that Syncthing would complete it.

After we experienced the above errors, we completely removed Syncthing from the client, re-installed it and re-hashed everything. After the initial hash we gave it 48 hours to make up its mind connecting to the master.

In those 48 hours it was re-downloading existing files as shown above (path, times, checksum identical). Only then did we deploy rsync again, because we cannot wait for Syncthing to re-download 7 TB of data before it actually begins on the missing 2 TB. But why should that matter? If rsync transfers a file, then Syncthing will scan this file, hash it and compare it to the master server and, surprise, it will be there and identical. So, no transfer needed.

What I don’t understand is that Syncthing on the client never reported a correct global state (always around 8 TB instead of nearly 10 TB), and that it re-downloads files that exist in both locations and are identical. If Syncthing is good at one thing, it should be this, no?

I suggest you remove or unshare the folders from each side, wait for the folders to show up as unshared or along those lines and see what global state it reports on both sides to validate that syncthing can actually read all 10TB of data.

I’ve also explained in the first two lines of my previous post what I’d like to see to further debug this.

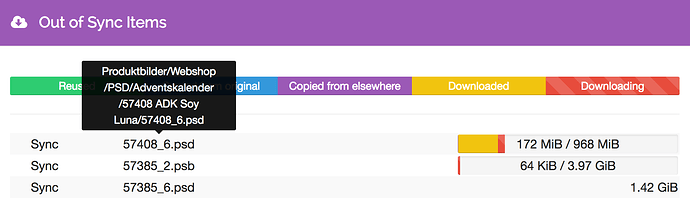

Sorry, had to wait for a good example:

MASTER:

# ls -l

-rwxrwxrwx+ 1 sadmin users 1015181729 Sep 26 2017 Produktbilder/Webshop/PSD/Adventskalender/57408 ADK Soy Luna/57408_6.psd

# openssl md5

MD5(Produktbilder/Webshop/PSD/Adventskalender/57408 ADK Soy Luna/57408_6.psd)= 888565db24dff24425643c47408969a9

CLIENT:

# ls -l

-rwxrwxrwx+ 1 sadmin users 1015181729 Sep 26 2017 Produktbilder/Webshop/PSD/Adventskalender/57408 ADK Soy Luna/57408_6.psd

# openssl md5

MD5(Produktbilder/Webshop/PSD/Adventskalender/57408 ADK Soy Luna/57408_6.psd)= 888565db24dff24425643c47408969a9
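The manual check above (size, modification time, MD5) can be scripted so it is easy to repeat for more files. This is only a minimal Python sketch to be run once on each machine; the function name `fingerprint` is made up for illustration and has nothing to do with Syncthing itself.

```python
import hashlib
import os


def fingerprint(path, chunk_size=1024 * 1024):
    """Return (size in bytes, mtime in whole seconds, MD5 hex digest) for one file."""
    st = os.stat(path)
    md5 = hashlib.md5()
    with open(path, "rb") as fh:
        # Read in chunks so a 1 GB PSD does not have to fit in memory.
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            md5.update(chunk)
    return st.st_size, int(st.st_mtime), md5.hexdigest()
```

Printing `fingerprint(path)` on master and client and comparing the output gives the same evidence as `ls -l` plus `openssl md5`. It only shows that the file content matches; it says nothing about the block hashes Syncthing has stored for the file.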

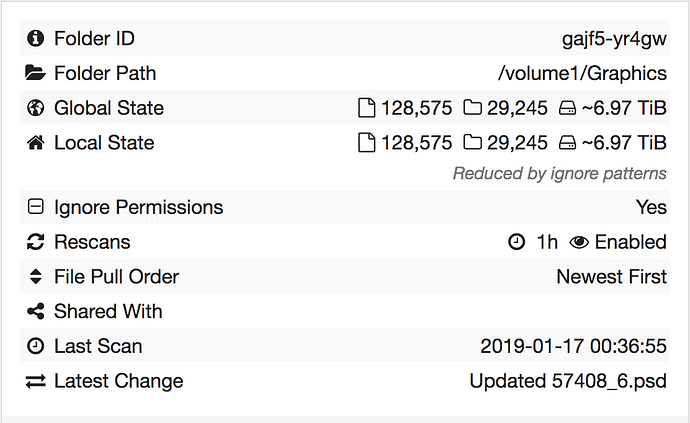

CLIENT SCREENSHOT:

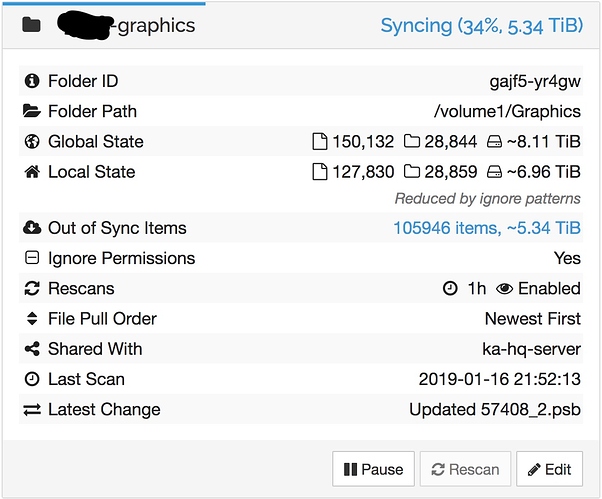

CLIENT FOLDER:

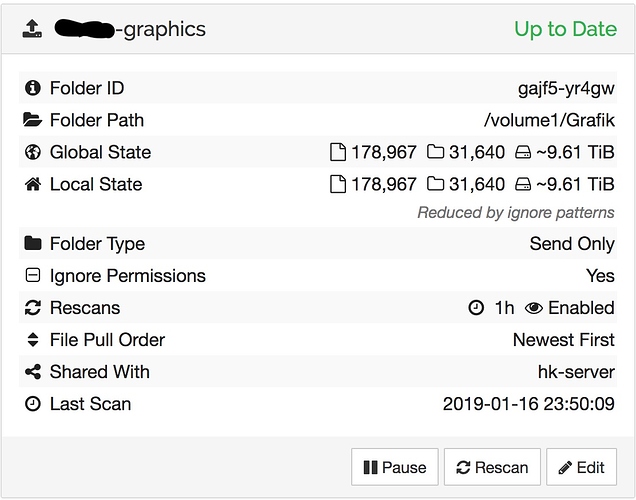

SERVER FOLDER:

If I unshare it will just show 127.830 on the client and 178.967 files as global state on the master, no?

I don’t think the 10 TB is the problem. I have an installation with 20 TB and it works just fine.

Well, Syncthing clearly hasn’t scanned/found a good chunk of your files for some reason, hence it is redownloading them. I suggest you shut down, remove the remote device, rescan, and see if local state starts matching global state. If it doesn’t, we should look into why, as that is the cause of the redownloads.

Will do.

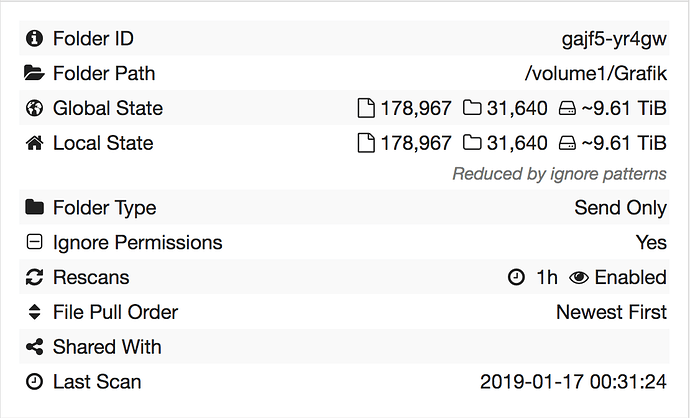

Result as expected:

MASTER:

CLIENT:

Also find reports more or less the same (I was lazy on the exclusions):

# dirs

29254

# files

128628
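For anyone wanting to repeat this count without `find`, here is a rough Python sketch. The helper name `count_tree` is hypothetical, and it applies no exclusions at all, so the numbers will only roughly match what Syncthing reports if ignore patterns are in use.

```python
import os


def count_tree(root):
    """Count directories and non-directory entries below root (root itself excluded)."""
    n_dirs = n_files = 0
    # os.walk does not follow symlinked directories by default.
    for _dirpath, dirnames, filenames in os.walk(root):
        n_dirs += len(dirnames)
        n_files += len(filenames)
    return n_dirs, n_files
```

Running it against the folder root on both machines gives a second opinion on the local state shown in the GUI.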

Puh…

So what you are saying is that you actually have less data than you thought?

No, this is expected:

- We transferred around 6.5 TB via rsync (I don’t know the 100% exact numbers)

- We hoped that Syncthing would pull the remaining 3 TB in the order (newest to oldest)

What we are seeing is:

- Syncthing does as expected for about 15 to 30 minutes (pull the latest files, partly re-using existing blocks etc.) after restarting Syncthing

- After the initial phase Syncthing starts pulling files that are already present on master and client and are identical

- On the client Syncthing never reports the correct global state

Possible explanation that I can come up with:

- The hashes don’t match on older files, therefore Syncthing re-downloads them

- Both master and client have large blocks enabled; however, the master was set up pre-v1 and the client post-v1. Maybe large blocks on the master were enabled at a later stage (can’t remember, since this was a couple of months ago).

Solutions?

- Remove folders from both ends, wipe the indexes

- Re-add folders, let them both re-hash/re-scan using large blocks

- Re-connect the two

Any other explanations/solutions you can think of?

So given your suggestion about large blocks this all starts to make sense. The files are identical but the blocks are not, as the files were scanned with different block sizes, hence syncthing redownloads.
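To make the point concrete, here is a simplified illustration (not Syncthing's actual scanner): the same bytes are split into SHA-256 hashed blocks at two different block sizes. The helper `block_hashes` and the sample data are made up for the example; 128 KiB and 256 KiB are just two sizes picked to show the effect.

```python
import hashlib


def block_hashes(data, block_size):
    """SHA-256 hex digest of each consecutive block_size-byte slice of data."""
    return [
        hashlib.sha256(data[i:i + block_size]).hexdigest()
        for i in range(0, len(data), block_size)
    ]


# 1 MiB of deterministic, non-repeating sample content (32768 digests of 32 bytes).
data = b"".join(hashlib.sha256(i.to_bytes(4, "big")).digest() for i in range(32768))

small = block_hashes(data, 128 * 1024)  # eight blocks
large = block_hashes(data, 256 * 1024)  # four blocks

# The content is byte-for-byte the same, yet the two block lists share no hash.
shared = set(small) & set(large)
```

Since the two lists have nothing in common, a device comparing block lists alone has no way to tell that the underlying file is identical, which would explain the redownloads.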

You need to enable large blocks on both sides, nuke the database (potentially by removing and re-adding the folder, but if you have a single folder a full wipe might be easier), let them rescan and then share the folders with each other.

Since it is not possible to create a folder straight away with large blocks what is the best way forward to make sure that all files are scanned with large blocks?

Shouldn’t it re-scan the second you enable this feature???

Anyway, how can I verify that this is the case? Is there a way to query the database about a certain file/path and retrieve the stored blocks/hashes?

It could, but it generally isn’t necessary. The reason you run into this is that it’s the initial scan on existing data on more than one device, which means every file is in conflict to begin with. Generally that’s only a metadata conflict which gets resolved invisibly without downloading anything. Having different large blocks settings makes it a data conflict instead.

You can however just set the option (soon) after creating the folder. It’ll restart with the new setting without having had time to do much.

You can query file info with this REST call: https://docs.syncthing.net/rest/db-file-get.html
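As a rough sketch of how that call could be made from Python: the endpoint takes `folder` and `file` query parameters and the API key goes in the `X-API-Key` header. The base URL, folder ID and API key below are placeholders you need to replace, and the helper names are invented for this example.

```python
import json
import urllib.parse
import urllib.request


def file_query_url(base_url, folder_id, rel_path):
    """Build the /rest/db/file URL for one file inside one folder."""
    query = urllib.parse.urlencode({"folder": folder_id, "file": rel_path})
    return f"{base_url.rstrip('/')}/rest/db/file?{query}"


def get_file_info(base_url, api_key, folder_id, rel_path):
    """Fetch and decode the JSON answer; needs a running Syncthing instance."""
    req = urllib.request.Request(
        file_query_url(base_url, folder_id, rel_path),
        headers={"X-API-Key": api_key},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


# Placeholder values, assuming the default GUI address:
# info = get_file_info("http://127.0.0.1:8384", "YOUR-API-KEY", "your-folder-id",
#                      "Marketing/Anzeigen/00_Anzeigen_Must Have.indd")
```

Running this against both devices for the same path lets you compare what each side has recorded for the file, for example the local versus the global entry in the answer.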

However, looking back at

# slave

-rwxrwxrwx+ 1 sc-syncthing root 2576384 Sep 27 2017 Marketing/Anzeigen/00_Anzeigen_Must Have.indd

MD5(Marketing/Anzeigen/00_Anzeigen_Must Have.indd)= 259a7dbcbdeb9029b19d585fbd94c81b

# master

-rwxrwxrwx+ 1 sadmin users 2576384 Sep 27 2017 Marketing/Anzeigen/00_Anzeigen_Must Have.indd

MD5(Marketing/Anzeigen/00_Anzeigen_Must Have.indd)= 259a7dbcbdeb9029b19d585fbd94c81b

this file is too small to be affected by any large block setting. “Large blocks” only kick in when the file is larger than 256 MiB. So while this may be part of it, it’s probably not 100% of whatever is going on in your setup.
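A quick check of the two files posted in this thread against that limit, as a sketch. The 256 MiB figure is simply the one quoted above and is treated as an assumption here, not verified against the Syncthing source; `may_use_large_blocks` is a made-up helper.

```python
MIB = 1024 * 1024
# Threshold as quoted in this thread; an assumption, not checked against the code.
LARGE_BLOCK_THRESHOLD = 256 * MIB


def may_use_large_blocks(size_bytes, threshold=LARGE_BLOCK_THRESHOLD):
    """True if a file of this size is above the quoted large-block threshold."""
    return size_bytes > threshold


indd_size = 2576384     # Marketing/Anzeigen/00_Anzeigen_Must Have.indd
psd_size = 1015181729   # Produktbilder/.../57408_6.psd

indd_affected = may_use_large_blocks(indd_size)  # False, about 2.5 MiB
psd_affected = may_use_large_blocks(psd_size)    # True, about 968 MiB
```

So the PSD example from earlier could be explained by mismatched block sizes, while the .indd file cannot.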

Or you can set up the folders, enable large blocks and then nuke the database, which will force things to start fresh.

Nevertheless, we could do a better job of identifying that the files are the same, just with different block sizes, which I think is worthy of a GitHub issue.

So in my case: just shut down Syncthing, nuke the database, restart Syncthing. It should re-scan/re-hash with the already enabled large blocks.

Will do so and report back. Do I need to open the GitHub issue?

You can, but it should be about more clever shortcuts when blocks mismatch.

OK, but before I open an issue is there a way to verify that this is actually happening? Some way to retrieve the blocks and hashes for a specific file/path?

Sadly no, I don’t think anything exposes hashes other than perhaps the database dump tool or a custom thing we could write.