I have encountered this problem today.

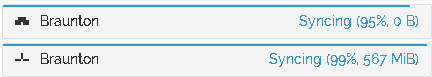

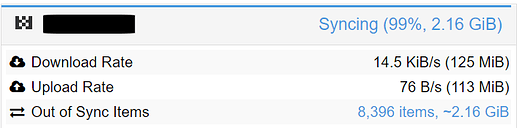

Device A (D4DZUGP) trying to push to Device C (TX7YPBI):

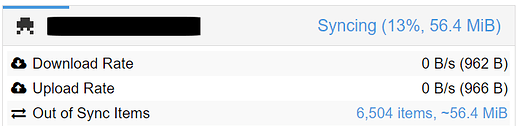

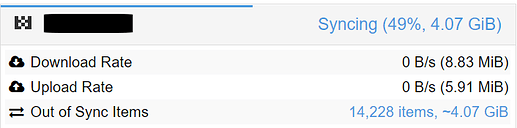

Device B (7LDIBZ4) trying to push to Device C (TX7YPBI):

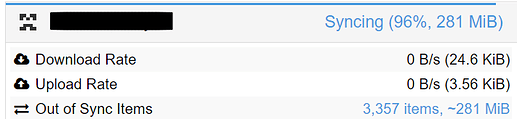

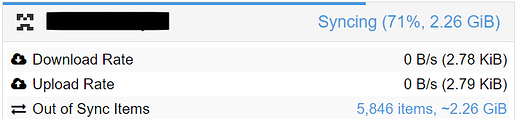

Device C (TX7YPBI):

I believe that this happened after upgrading the Syncthing binaries on Devices A and B. I have also tried to upgrade the binary on Device C today, but it has made no difference. Also, all three devices are running my self-compiled build, which is v1.12.0 with a few tweaks (see details).

I have compared a directory from one of the folders that are now stuck in sync, and this is the result.

Device A:

    {
      "availability": [
        {
          "id": "7LDIBZ4-XXXXXXX-XXXXXXX-XXXXXXX-XXXXXXX-XXXXXXX-XXXXXXX-XXXXXXX",
          "fromTemporary": false
        }
      ],
      "global": {
        "deleted": false,
        "ignored": false,
        "invalid": false,
        "localFlags": 0,
        "modified": "2020-11-10T00:21:13.2285041+09:00",
        "modifiedBy": "SWPW3LH",
        "mustRescan": false,
        "name": "xxxxxxx",
        "noPermissions": true,
        "numBlocks": 0,
        "sequence": 361637,
        "size": 128,
        "type": "FILE_INFO_TYPE_DIRECTORY",
        "version": [
          "SWPW3LH:1605597562"
        ]
      },
      "local": {
        "deleted": false,
        "ignored": false,
        "invalid": false,
        "localFlags": 0,
        "modified": "2020-11-10T00:21:13.2285041+09:00",
        "modifiedBy": "SWPW3LH",
        "mustRescan": false,
        "name": "xxxxxxx",
        "noPermissions": true,
        "numBlocks": 0,
        "sequence": 361637,
        "size": 128,
        "type": "FILE_INFO_TYPE_DIRECTORY",
        "version": [
          "SWPW3LH:1605597562"
        ]
      }
    }

Device C:

    {
      "availability": [
        {
          "id": "D4DZUGP-XXXXXXX-XXXXXXX-XXXXXXX-XXXXXXX-XXXXXXX-XXXXXXX-XXXXXXX",
          "fromTemporary": false
        },
        {
          "id": "7LDIBZ4-XXXXXXX-XXXXXXX-XXXXXXX-XXXXXXX-XXXXXXX-XXXXXXX-XXXXXXX",
          "fromTemporary": false
        }
      ],
      "global": {
        "deleted": false,
        "ignored": false,
        "invalid": false,
        "localFlags": 0,
        "modified": "2020-11-09T15:21:13.2285041Z",
        "modifiedBy": "SWPW3LH",
        "mustRescan": false,
        "name": "xxxxxxx",
        "noPermissions": true,
        "numBlocks": 0,
        "sequence": 108916,
        "size": 128,
        "type": "FILE_INFO_TYPE_DIRECTORY",
        "version": [
          "SWPW3LH:1605597562"
        ]
      },
      "local": {
        "deleted": false,
        "ignored": false,
        "invalid": false,
        "localFlags": 0,
        "modified": "2020-11-09T15:21:13.2285041Z",
        "modifiedBy": "SWPW3LH",
        "mustRescan": false,
        "name": "xxxxxxx",
        "noPermissions": true,
        "numBlocks": 0,
        "sequence": 108916,
        "size": 128,
        "type": "FILE_INFO_TYPE_DIRECTORY",
        "version": [
          "SWPW3LH:1605597562"
        ]
      }
    }
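
The two outputs above differ textually in only two fields: `modified` (the same instant written in `+09:00` and in UTC) and `sequence`. To rule out anything I might have missed by eye, a comparison along these lines could be used. This is only a rough sketch: `to_instant` and `diff_records` are hypothetical helper names, and skipping `sequence` rests on my assumption that it is a per-device counter that is expected to differ between devices.

```python
import re
from datetime import datetime, timezone

# Matches timestamps such as 2020-11-10T00:21:13.2285041+09:00 or ...Z
_TS = re.compile(
    r"^(\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2})(?:\.(\d+))?(Z|[+-]\d{2}:\d{2})$"
)


def to_instant(ts):
    """Return (UTC datetime at whole seconds, nanoseconds) for a timestamp."""
    m = _TS.match(ts)
    if m is None:
        raise ValueError("unrecognised timestamp: %r" % ts)
    base, frac, tz = m.groups()
    tz = "+00:00" if tz == "Z" else tz
    whole = datetime.fromisoformat(base + tz).astimezone(timezone.utc)
    # Pad or cut the fraction to 9 digits so 7-digit values compare exactly.
    nanos = int((frac or "").ljust(9, "0")[:9])
    return whole, nanos


def diff_records(a, b, ignore=("sequence",)):
    """Return {field: (value_a, value_b)} for fields that really differ.

    `ignore` defaults to "sequence" on the ASSUMPTION that it is local to
    each device; pass ignore=() to compare it as well.
    """
    diffs = {}
    for key in sorted(set(a) | set(b)):
        if key in ignore:
            continue
        va, vb = a.get(key), b.get(key)
        if key == "modified" and va is not None and vb is not None:
            same = to_instant(va) == to_instant(vb)
        else:
            same = va == vb
        if not same:
            diffs[key] = (va, vb)
    return diffs


# Fields copied from the "global" entries above (abbreviated).
device_a = {
    "modified": "2020-11-10T00:21:13.2285041+09:00",
    "modifiedBy": "SWPW3LH",
    "sequence": 361637,
    "size": 128,
    "version": ["SWPW3LH:1605597562"],
}
device_c = {
    "modified": "2020-11-09T15:21:13.2285041Z",
    "modifiedBy": "SWPW3LH",
    "sequence": 108916,
    "size": 128,
    "version": ["SWPW3LH:1605597562"],
}

print(diff_records(device_a, device_c))
```

With the values from this post, the comparison reports no differences once the timestamps are normalised and `sequence` is skipped, which matches what I see when comparing by hand.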

Do you have any idea what the culprit may be? I am guessing that I can “fix” the issue with `STRECHECKDBEVERY=1s`, but I would like to investigate a little bit first before doing so.

Just for the record, the actual local and global states on all three devices are exactly the same.
