So the indexes have finished rebuilding:

Node B was started alone, indexes built and shut down - everything showing “up to date”

Node A was started alone, indexes built - everything showing “up to date”

Node B was then restarted and left to scan and up as needed

Much log activity for a while, and then nothing.

On Node B:

Some .tmp files observed created again but staying as .tmp files. This a grep of the the log for one of them - ~syncthing~DSC00966.ARW.tmp

[SVKBT] 2016/10/25 11:40:13.615936 sharedpullerstate.go:251: DEBUG: sharedPullerState RAW-Temp RAW-2015-12-15-Christmas-Show\DSC00966.ARW pullNeeded start -> 14

[SVKBT] 2016/10/25 11:40:13.659938 sharedpullerstate.go:251: DEBUG: sharedPullerState RAW-Temp RAW-2015-12-15-Christmas-Show\DSC00966.ARW pullNeeded start -> 15

[SVKBT] 2016/10/25 11:40:13.718942 sharedpullerstate.go:251: DEBUG: sharedPullerState RAW-Temp RAW-2015-12-15-Christmas-Show\DSC00966.ARW pullNeeded start -> 16

[SVKBT] 2016/10/25 11:40:13.745943 sharedpullerstate.go:251: DEBUG: sharedPullerState RAW-Temp RAW-2015-12-15-Christmas-Show\DSC00966.ARW pullNeeded start -> 17

[SVKBT] 2016/10/25 11:40:14.042960 progressemitter.go:63: DEBUG: progress emitter: timer - looking after 5

[SVKBT] 2016/10/25 11:40:14.042960 progressemitter.go:103: DEBUG: progress emitter: emitting map[string]map[string]*model.pullerProgress{“Nexus5-Camera”:map[string]*model.pullerProgress{“IMG_20161022_152218.jpg”:(*model.pullerProgress)(0xc047a1fbc0)}, “Movies-Temp”:map[string]*model.pullerProgress{“2016-09-13-Dancing\VID_20160928_Car170451.mp4”:(*model.pullerProgress)(0xc047a1fc00)}, “RAW-Temp”:map[string]*model.pullerProgress{“RAW-2015-12-15-Christmas-Show\DSC00966.ARW”:(*model.pullerProgress)(0xc047a1fb00)}, “PICS-Misc”:map[string]*model.pullerProgress{“Cycling\PANO_20160816_064724.jpg”:(*model.pullerProgress)(0xc047a1fb40)}, “PICS-Temp”:map[string]*model.pullerProgress{“2016-08-06-France-Holiday\IMG_20160723_164138.jpg”:(*model.pullerProgress)(0xc047a1fb80)}}

[SVKBT] 2016/10/25 11:40:14.108964 rwfolder.go:196: DEBUG: rwFolder/Movies@0xc0435a0000 remote index updated, rescheduling pull

[SVKBT] 2016/10/25 11:40:14.761001 sharedpullerstate.go:261: DEBUG: sharedPullerState Nexus5-Camera IMG_20161022_152218.jpg pullNeeded done -> 14

[SVKBT] 2016/10/25 11:40:15.483043 sharedpullerstate.go:261: DEBUG: sharedPullerState Nexus5-Camera IMG_20161022_152218.jpg pullNeeded done -> 13

[SVKBT] 2016/10/25 11:40:19.043246 progressemitter.go:63: DEBUG: progress emitter: timer - looking after 5

[SVKBT] 2016/10/25 11:40:19.043246 progressemitter.go:103: DEBUG: progress emitter: emitting map[string]map[string]*model.pullerProgress{“PICS-Temp”:map[string]*model.pullerProgress{“2016-08-06-France-Holiday\IMG_20160723_164138.jpg”:(*model.pullerProgress)(0xc04727e640)}, “Nexus5-Camera”:map[string]*model.pullerProgress{“IMG_20161022_152218.jpg”:(*model.pullerProgress)(0xc04727e680)}, “Movies-Temp”:map[string]*model.pullerProgress{“2016-09-13-Dancing\VID_20160928_Car170451.mp4”:(*model.pullerProgress)(0xc04727e6c0)}, “RAW-Temp”:map[string]*model.pullerProgress{“RAW-2015-12-15-Christmas-Show\DSC00966.ARW”:(*model.pullerProgress)(0xc04727e700)}, “PICS-Misc”:map[string]*model.pullerProgress{“Cycling\PANO_20160816_064724.jpg”:(*model.pullerProgress)(0xc04727e740)}}

Node B now shows some errors rolling in the log

[SVKBT] 2016/10/25 20:46:27.314484 progressemitter.go:82: DEBUG: progress emitter: nothing new

[SVKBT] 2016/10/25 20:46:32.314770 progressemitter.go:63: DEBUG: progress emitter: timer - looking after 5

[SVKBT] 2016/10/25 20:46:32.314770 progressemitter.go:82: DEBUG: progress emitter: nothing new

[SVKBT] 2016/10/25 20:46:37.315056 progressemitter.go:63: DEBUG: progress emitter: timer - looking after 5

[SVKBT] 2016/10/25 20:46:37.315056 progressemitter.go:82: DEBUG: progress emitter: nothing new

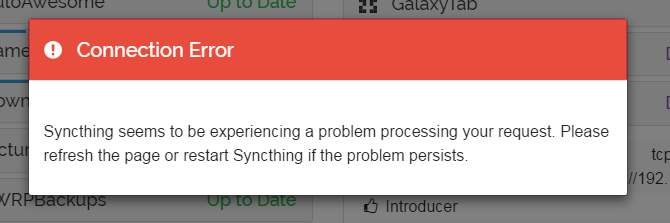

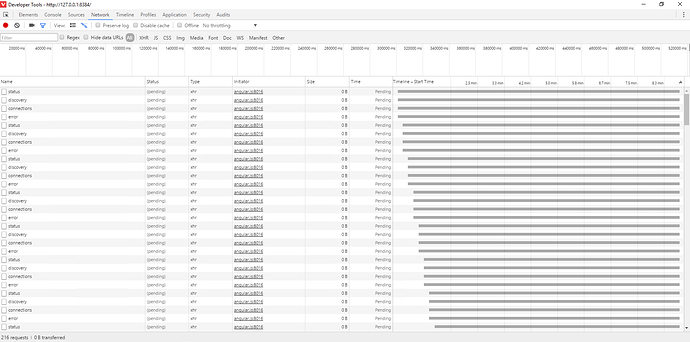

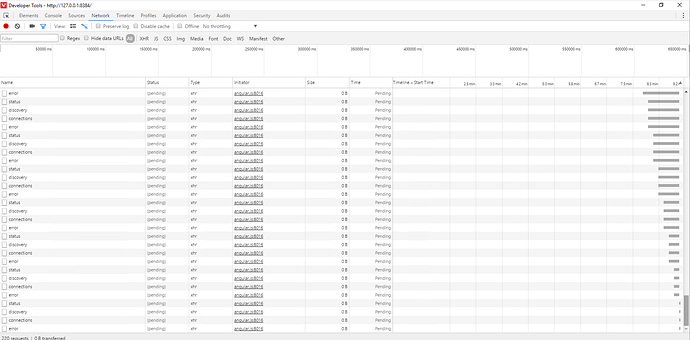

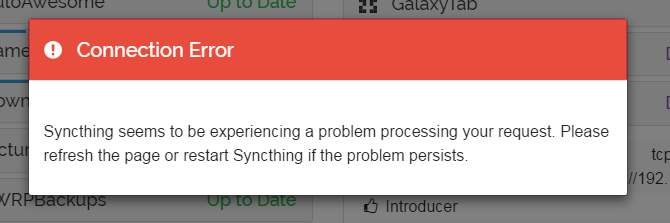

The web gui shows this error:

On Node A

The web gui shows everything up to date and all devices disconnected. I don’t see any open connections to Node B in netstat -an

Last contact time to Node B shows 11:29 (~11hrs ago) so I think something is forcing the two to disconnect. I can however telnet to port 22000 from each other so there’s nothing blocking them from talking. They both have 5/8 Discovery sources and 2/2 listeners. I believe the web interface on the Node A isn’t up to date though as it shows 9hrs uptime, but last restart was actually 10.5hrs ago.

These sort of messages scrolling through the log:

[R7DNA] 2016/10/25 22:39:29.633389 rwfolder.go:223: DEBUG: rwFolder/RAW-PICS@0x121be160 skip (curSeq == prevSeq) 0 true

[R7DNA] 2016/10/25 22:39:32.684412 rwfolder.go:223: DEBUG: rwFolder/PICS-Misc@0x121bed10 skip (curSeq == prevSeq) 0 true

On Node B

I restarted syncthing on Node B and there was action in the log as was rescanning. About half the folders are Out of Sync again since it completed.

The grep for DSC00966.ARW shows this now

syncthing.log:[SVKBT] 2016/10/25 22:45:07.147633 walk.go:252: DEBUG: temporary: RAW-2015-12-15-Christmas-Show~syncthing~DSC00966.ARW.tmp

syncthing.log:[SVKBT] 2016/10/25 22:45:58.361562 rwfolder.go:442: DEBUG: rwFolder/RAW-Temp@0xc043310000 handling RAW-2015-12-15-Christmas-Show\DSC00966.ARW

syncthing.log:[SVKBT] 2016/10/25 23:07:31.819543 rwfolder.go:442: DEBUG: rwFolder/RAW-Temp@0xc043310000 handling RAW-2015-12-15-Christmas-Show\DSC00966.ARW

syncthing.log:[SVKBT] 2016/10/25 23:08:01.591246 rwfolder.go:442: DEBUG: rwFolder/RAW-Temp@0xc043310000 handling RAW-2015-12-15-Christmas-Show\DSC00966.ARW

The log on Node B is showing similar contents to Node A

[SVKBT] 2016/10/25 23:27:20.765547 rwfolder.go:223: DEBUG: rwFolder/PICS-Misc@0xc04220a000 skip (curSeq == prevSeq) 2496 true

[SVKBT] 2016/10/25 23:27:20.906555 rwfolder.go:223: DEBUG: rwFolder/PICS-Temp@0xc043472640 skip (curSeq == prevSeq) 589 true

On Node A

The log now shows these messages since the rescan of Node B finished

[R7DNA] 2016/10/25 23:29:24.282047 progressemitter.go:63: DEBUG: progress emitter: timer - looking after 1

[R7DNA] 2016/10/25 23:29:24.282047 progressemitter.go:82: DEBUG: progress emitter: nothing new

[R7DNA] 2016/10/25 23:29:29.286170 progressemitter.go:63: DEBUG: progress emitter: timer - looking after 1