Hello,

We are using Syncthing in a rather unconventional way: we have one “central” hub and about 100 other devices. No synchronisation takes place between the devices themselves, only from the central hub to each device and vice versa. In total we have around 1000 folders synchronising, on average about 6 folders per device, with some extra folders for some devices.

Everything is running on Windows Server 2016.

What we see is that when we open the GUI on the central hub, all folders stay in the state “Unknown” for around 10-15 minutes, and it takes maybe another 10-15 minutes (on a normal day) before all folders show their “correct” status.

We cannot see anything in terms of CPU, RAM or disk usage that should be an issue: CPU is usually around 20%, Syncthing uses about 20% of RAM (total RAM usage is about 50%), and the average disk response time (for syncthing.exe) is 1-5 ms.

The other unconventional part of our setup is that the average response time to the devices is around 900 ms, as the devices are remote without normal connection possibilities.

Lastly, all the files that we are synchronising are on Azure storage, so access is a bit slower than it would be with the files on the local machine.

The central hub runs Syncthing 1.10.0 and the remote devices currently run 1.3.4 (they will be upgraded rather soon).

Do you have any ideas as to how I can increase the performance on the central hub? We are currently using Watch for Changes on all folders, which may be the culprit, but it is rather important that files are transferred as soon as possible instead of on a schedule.

I would gladly provide some logs, but I don’t fully understand all the flags available, and since the GUI is so incredibly slow, it takes a very long time just to get the settings or advanced settings popup to show.

If there is anything else needed from me, please tell me.

Thanks in advance

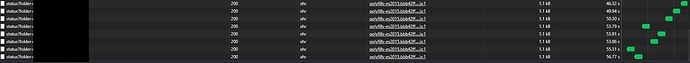

Edit: One more thing: when I tried an API call to /rest/db/status with a folder as parameter, I left it running for 5 minutes without getting any response.
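
In case anyone wants to reproduce the call, something along these lines should do it. This is only a minimal sketch in Python: the base URL (Syncthing's default GUI address), the folder ID and the API key are placeholders I am assuming here, not values from our setup. It sends the `X-API-Key` header and gives up after a timeout instead of hanging indefinitely, so the response time per folder can be measured.

```python
import json
import time
import urllib.error
import urllib.parse
import urllib.request


def build_status_request(base_url, folder_id, api_key):
    """Build a GET request for /rest/db/status for a single folder."""
    query = urllib.parse.urlencode({"folder": folder_id})
    url = f"{base_url.rstrip('/')}/rest/db/status?{query}"
    # Syncthing's REST API authenticates via the X-API-Key header.
    return urllib.request.Request(url, headers={"X-API-Key": api_key})


def timed_status(base_url, folder_id, api_key, timeout_s=30.0):
    """Return (elapsed_seconds, parsed_json_or_None).

    None means the call timed out, failed, or returned invalid JSON.
    """
    request = build_status_request(base_url, folder_id, api_key)
    start = time.monotonic()
    try:
        with urllib.request.urlopen(request, timeout=timeout_s) as response:
            body = json.load(response)
    except (urllib.error.URLError, OSError, ValueError):
        body = None
    return time.monotonic() - start, body


# Example call (placeholder values, adjust to your own installation):
# elapsed, status = timed_status("http://127.0.0.1:8384", "my-folder", "my-api-key")
# print(f"{elapsed:.1f}s", status)
```

Running this against a handful of folders would show whether every folder is equally slow or only some of them.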