Care to try the latest development snapshot, linked above? I reverted what I think was the problem in the leveldb library…

Sorry, can you give me the link? I can’t find it.

http://build.syncthing.net/job/syncthing/lastSuccessfulBuild/

it’s linked above the headline of the thread

(Thanks for the link, I was looking at the comments, hehe)

Results after testing the devel snapshot v0.9.5+32-gdab0aec (linux-amd64):

- A first (strange) run used 1.5GB of RAM right from the start and was reaching 1.8GB when I tried to close it, because it was using too much CPU (3 cores for about 25 minutes). Shutting down from the GUI didn’t work, so I had to kill it.

- A second run started at 220MB, reached 1.4GB during the scan and stayed there (no more wild CPU usage, but the RAM stayed occupied). [update] I left it running, and the RAM usage has now dropped to 280MB.

It’s rehashing files. There’s a bug where a request to exit during rehashing doesn’t take effect until the scan is done.

Forgive me if this has already been discussed, but for large indexes (say, larger than 5 MB), might it make sense to calculate something like an MD5 hash of the index on every member of the swarm and, if a discrepancy is found, initiate the transfer of the index to the member whose hash is inconsistent with the global index hash?

Comparing hashes would use a minimum of bandwidth, rather than transferring full indexes on every connection as a rule.
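To illustrate the proposal, here is a rough Go sketch, assuming a simplified, hypothetical `FileInfo` entry with just a name and a version. Each device sorts its index, hashes it with MD5 and exchanges only the 16-byte digest; the full index is sent only when the digests differ:

```go
package main

import (
	"crypto/md5"
	"encoding/binary"
	"fmt"
	"sort"
)

// FileInfo is a hypothetical, simplified index entry (not Syncthing's type).
type FileInfo struct {
	Name    string
	Version uint64
}

// indexDigest returns an MD5 digest over the index. Entries are sorted by
// name first, so devices holding the same index compute the same digest
// regardless of the order in which they stored the entries.
func indexDigest(index []FileInfo) [md5.Size]byte {
	sorted := append([]FileInfo(nil), index...)
	sort.Slice(sorted, func(i, j int) bool { return sorted[i].Name < sorted[j].Name })
	h := md5.New()
	var v [8]byte
	for _, f := range sorted {
		// Length-prefix the name so "ab"+"c" and "a"+"bc" hash differently.
		binary.BigEndian.PutUint64(v[:], uint64(len(f.Name)))
		h.Write(v[:])
		h.Write([]byte(f.Name))
		binary.BigEndian.PutUint64(v[:], f.Version)
		h.Write(v[:])
	}
	var out [md5.Size]byte
	copy(out[:], h.Sum(nil))
	return out
}

// needsIndexTransfer reports whether the full index must be sent, which is
// only the case when the digests differ.
func needsIndexTransfer(local, remote [md5.Size]byte) bool {
	return local != remote
}

func main() {
	a := []FileInfo{{"a.txt", 1}, {"b.txt", 2}}
	b := []FileInfo{{"b.txt", 2}, {"a.txt", 1}}
	c := []FileInfo{{"a.txt", 1}, {"b.txt", 3}}
	fmt.Println(needsIndexTransfer(indexDigest(a), indexDigest(b))) // false
	fmt.Println(needsIndexTransfer(indexDigest(a), indexDigest(c))) // true
}
```

A mismatch only says *that* the indexes differ, not *where*, so on its own it still triggers a full index transfer; that is the gap an index-delta approach addresses.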

Welcome to the forum!

Sorry for the late reply. It is planned to send only the index delta (if any):

Maybe now, with version vectors, this will become easier or more likely to be implemented by the developers.
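A sketch of the delta idea in Go, under the assumption (hypothetical here, not a description of the actual protocol) that each device stamps every index change with an increasing local sequence number and each peer remembers the highest number it has received. On reconnect, only newer entries need to be sent:

```go
package main

import "fmt"

// Entry is a hypothetical index entry; Sequence is a local counter that
// increases every time this device changes an entry.
type Entry struct {
	Name     string
	Sequence uint64
}

// Index holds one device's entries plus its highest sequence number.
type Index struct {
	entries map[string]Entry
	seq     uint64
}

func NewIndex() *Index { return &Index{entries: make(map[string]Entry)} }

// Update records a change and stamps it with the next sequence number.
func (ix *Index) Update(name string) {
	ix.seq++
	ix.entries[name] = Entry{Name: name, Sequence: ix.seq}
}

// DeltaSince returns only the entries changed after the peer's last seen
// sequence number.
func (ix *Index) DeltaSince(lastSeen uint64) []Entry {
	var delta []Entry
	for _, e := range ix.entries {
		if e.Sequence > lastSeen {
			delta = append(delta, e)
		}
	}
	return delta
}

func main() {
	ix := NewIndex()
	ix.Update("a.txt")
	ix.Update("b.txt")
	peerSeen := ix.seq // the peer has received everything up to here
	ix.Update("a.txt") // a.txt changes again after that
	fmt.Println(len(ix.DeltaSince(0)), len(ix.DeltaSince(peerSeen))) // 2 1
}
```

A peer that has seen nothing asks from sequence 0 and gets the full index, so the same call covers both the first connection and later reconnects.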

Dear developers, first of all I want to thank you for this great program!

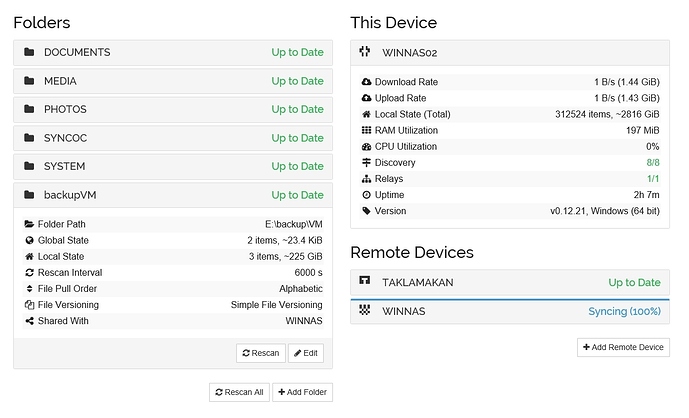

I am using it to sync a LOT of files (312524 files, 2.816 TB - see Screenshot - WINNAS02 is the source, WINNAS is the target). Recently I wanted to sync the backup of my VMs, but since the files are quite big (>230 GB) Syncthing won’t transmit them. Is there any way I can force Syncthing to transfer files >230GB?

Thanks, infafk

Edit (rumpelsepp): screenshot added, just to prevent it from disappearing from $secure_image_hoster in the future.

Syncthing can only handle files up to 130GB at the moment. The limit has already been increased, but I don’t know which version will get this change.

Yes, sorry about that. Will be fixed in v0.13.

Great, thank you very much! I am looking forward to syncing my virtual machine backups.

As a general thought on this, I’m not sure Syncthing is the best tool to use here. As I see it, Syncthing has a few “killer” use cases where it makes sense:

- Bidirectional syncing, where more than one machine makes changes and all of them should get a consistent view of the folder.
- “Real time” syncing, where changes to files are continuously synced somewhere else.
- “Cluster” syncing, where several devices help each other get in sync, reducing the overall amount of traffic sent from any single host.

For your virtual machine backups you’ll get none of these, with the possible exception of the last if you have multiple backup destinations that can sync with each other. The disk images need to be quiescent to sync successfully, and hashing those large files is going to take a long time on every change. Syncthing needs to keep the full block list in RAM while doing that, which will be a significant amount of memory for files this large.
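To put a rough number on the block list, here is a back-of-the-envelope estimate in Go. It assumes fixed 128 KiB blocks and a 44-byte record per block (a 32-byte SHA-256 hash plus offset and size); both are simplifying assumptions, and real memory use is higher once runtime and allocation overhead are included:

```go
package main

import "fmt"

// Assumptions for this estimate (not exact Syncthing internals):
const (
	blockSize     = 128 * 1024 // fixed block size in bytes
	bytesPerBlock = 32 + 8 + 4 // SHA-256 hash + int64 offset + int32 size
)

// blockCount returns how many blocks a file of the given size splits into,
// counting a trailing partial block.
func blockCount(fileSize int64) int64 {
	return (fileSize + blockSize - 1) / blockSize
}

// blockListBytes is a lower bound on the memory a file's block list needs,
// ignoring per-allocation and slice overhead.
func blockListBytes(fileSize int64) int64 {
	return blockCount(fileSize) * bytesPerBlock
}

func main() {
	const size = 230_000_000_000 // a 230 GB VM backup, as in the question
	fmt.Println(blockCount(size), blockListBytes(size)) // 1754761 77209484
}
```

For the 230 GB backup from the question this gives about 1.75 million blocks and roughly 77 MB of raw block records for a single file, before any overhead, on top of re-reading all 230 GB to rehash it.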

It sounds like rsync would be vastly more resource efficient while accomplishing the same thing.

As I understand it, he already has backups of the VMs, which he wants to sync. He doesn’t want to sync live VMs.

Rsync (or something like it) would still be the better solution, since @infafk wants a one-way sync between two machines and the backups are (probably) already created on a schedule, so a scheduled sync with rsync makes much more sense.

Thanks for your replies! The VMs are indeed backups, not running machines. I want to use Syncthing because I will soon have a lot of VMs on 5-7 hosts. Most of the machines have a similar layout, so with Syncthing the traffic should be massively reduced thanks to the similarities between the VMs. Additionally, there are two locations that should be in sync: 2-3 Syncthing hosts in one place and 3-4 in the other. This network of 5-7 hosts makes Syncthing a much better alternative than rsync.
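The expected saving relies on block reuse: a block whose hash already exists in a local file can be copied locally instead of being downloaded. A toy Go sketch of that check, assuming fixed-size blocks (shrunk to 4 bytes so the example is readable) and hypothetical helper names `blockHashes` and `blocksToFetch`:

```go
package main

import (
	"crypto/sha256"
	"fmt"
)

// blockSize is deliberately tiny so the example is readable; real block
// sizes are far larger (an assumption of this sketch).
const blockSize = 4

// blockHashes splits data into fixed-size blocks and hashes each one.
func blockHashes(data []byte) [][32]byte {
	var hs [][32]byte
	for off := 0; off < len(data); off += blockSize {
		end := off + blockSize
		if end > len(data) {
			end = len(data)
		}
		hs = append(hs, sha256.Sum256(data[off:end]))
	}
	return hs
}

// blocksToFetch returns the indices of target blocks whose hash is not
// already present locally; all other blocks can be copied from local files.
func blocksToFetch(target [][32]byte, local map[[32]byte]bool) []int {
	var need []int
	for i, h := range target {
		if !local[h] {
			need = append(need, i)
		}
	}
	return need
}

func main() {
	vm1 := []byte("BASEBASEBASEvm01") // image already on this host
	vm2 := []byte("BASEBASEBASEvm02") // similar image to be synced

	local := make(map[[32]byte]bool)
	for _, h := range blockHashes(vm1) {
		local[h] = true
	}
	fmt.Println(blocksToFetch(blockHashes(vm2), local)) // [3]
}
```

One caveat of fixed-size blocks: identical content only matches when it sits at the same block boundaries in both images, so the actual savings depend on how closely the VM disk layouts line up.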

Furthermore, I only need to maintain one syncing system instead of two… The only downside (so far) has been the maximum file size. BTW: how much will you be increasing it?

The new limit will be 10 times the current, so 1310 GB.

Haha, great, that will be enough for me, I guess.
